No, ChatGPT isn't ruining crowdsourcing

ChatGPT isn't worsening data quality issues on crowdsourced platforms.

In this 7-minute read we’ll discuss a study that examines the impact of ChatGPT on crowdsourced tasks.

After completing this article I reached out to the authors for comment. They were kind enough to provide a detailed response, which I have added as an addendum to the bottom of this article with their permission.

What’s happening?

There’s a new study circulating that looks at how participants on Amazon’s Mechanical Turk crowdsourcing service (MTurk) are using ChatGPT to help them complete text annotation and summarization tasks. In a brilliant moment of branding, participants on the platform were designated “Turkers.” The study concludes that a substantial portion of Turkers, about 40%, are leveraging ChatGPT or similar tools, which raises concerns about the diminishing role of human involvement in task completion and the potential impact on data quality.

This study gained attention on Twitter, with retweets from economist Alex Tabarrok and a brief thread by Wharton Professor Ethan Mollick who writes over at One Useful Thing. I generally like Mollick’s work on AI and education and recommend checking out his newsletter. That said, as we’ll discuss, I think he was a little too enthusiastic on this one (in fairness to Mollick this is Twitter we’re talking about).

If you’re unfamiliar, MTurk is a crowdsourcing marketplace that facilitates the outsourcing of simple, rote tasks to a distributed workforce capable of performing these tasks remotely. Turkers are typically compensated with small amounts, sometimes less than a dollar, for each task they complete.

Launched in 2005, MTurk was originally used for transcription, image annotation, rating sentiment, and similar tasks, but Turkers eventually became a go-to group for social science research.1

This particular study — which I’ll refer to as “GPTurk” following the authors’ convention — was designed specifically to detect levels of Large Language Model (LLM) usage amongst participants. Turkers were asked to summarize medical journal abstracts, condensing approximately 400-word abstracts into 100-word summaries.2 The researchers then utilized a machine-learning model to estimate the number of study participants who likely used ChatGPT or a similar language model in their summarization activity. This estimation was achieved by fine-tuning a model based on both human and machine-generated summaries, as well as by cross-referencing against participant actions such as "copy and paste," which the researchers were able to track through keyboard stroke data.

The full name of GPTurk is “Artificial Artificial Artificial Intelligence: Crowd Workers Widely Use Large Language Models for Text Production Tasks,”3 which is perhaps overstating the results. The paper’s conclusion does the same:

Here we found that crowd workers on MTurk widely use LLMs in a summarization task, which raises serious concerns about the gradual dilution of the “human factor” in crowdsourced text data.

Or as Mollick put it, “Mechanical Turk is just AI now.”

The study doesn’t support those conclusions

First, it’s important to call out that GPTurk was a small-sample study. There were 44 total Turkers, who completed 46 total tasks. (There were a number of different passages to be summarized so Turkers were able to complete multiple summaries). The authors estimate that between 33% and 46% of participants likely leveraged an LLM, which translates to between 15 and 20 participants.

Based on the most recent reliable data I found from 2018, the estimated total population of Turkers on MTurk is around 100,000. Therefore, this study, with its 44 participants, represents only a minute fraction of the total population, accounting for just 0.04%.4
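For readers who want to check the arithmetic, here is a minimal sketch in Python. It uses only the figures quoted above (44 participants, a 33% to 46% estimated range, and the roughly 100,000-worker population estimate from 2018); the variable and function names are my own and are purely illustrative.

```python
# Figures quoted in the article; the population size is the 2018 estimate.
participants = 44
population_estimate = 100_000
llm_share_low, llm_share_high = 0.33, 0.46


def share_to_count(share, n):
    """Convert an estimated share into a whole number of participants."""
    return round(share * n)


low_count = share_to_count(llm_share_low, participants)
high_count = share_to_count(llm_share_high, participants)

# Sample size as a percentage of the estimated Turker population.
sample_fraction_pct = participants / population_estimate * 100

print(f"Estimated LLM users: {low_count} to {high_count} of {participants}")
print(f"Sample as share of population: {sample_fraction_pct:.2f}%")
```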

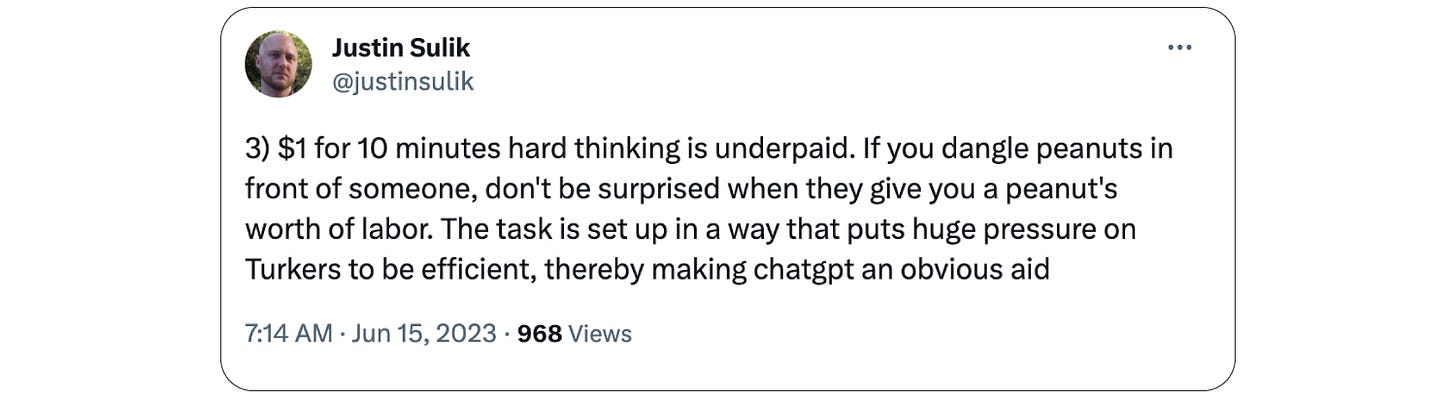

Shifting our focus to broader criticisms, it’s worth grounding our discussion using a short Twitter thread from Justin Sulik, a postdoc researcher at LMU Munich who has studied crowdsourcing and study methodology. Sulik linked to GPTurk and offered a number of critiques, which I’ll summarize thusly:

Of course MTurk has data quality issues!

Study goals should be aligned to study methodology, including participant selection. MTurk isn’t the right solution for all studies.

Pretest your study questions and tasks!

Participants respond to instructions. If you prefer study participants not use ChatGPT you should say that explicitly.

People respond to incentives. If you pay someone a below-market wage for a task (like $1 to produce high-quality text summarization), don’t be surprised if they utilize labor-saving technologies (like ChatGPT).

Be a good human. Tell Turkers what you want and pay them appropriately.

I’d like to build on Sulik’s response in part by invoking research best practices, but also by reanalyzing the GPTurk data itself. Kudos to the authors for making the data available as that allows a deeper analysis of the results. It’s only fair I do the same: here is my GitHub repository for my analysis of the study.

Clear instructions

Let's begin with the instructions provided to respondents for the task:

Instructions

You will be given a short text (around 400 words) with medicine-related information.

Your task is to:

Read the text carefully.

Write a summary of the text. Your summary should:

Convey the most important information in the text, as if you are trying to inform another person about what you just read.

Contain at least 100 words.

We expect high-quality summaries and will manually inspect some of them.

As Sulik identified, the instructions do not specify the rules for the task. Can participants copy and paste from the original article? Are they allowed to use Google to look up unknown medical terminology? Can they utilize ChatGPT as an aid? Who's to know? These specifics are not provided in the instructions.

Participant recruiting and data quality issues

In his tweet sharing GPTurk, Mollick linked to a previous Twitter thread criticizing bad MTurk study design. His thread included a reference to “An MTurk Crisis? Shifts in Data Quality and the Impact on Study Results” by Michael Chmielewski and Sarah Kucker. Chmielewski and Kucker note that overall MTurk quality is declining, but that “[T]hese detrimental effects can be mitigated by using response validity indicators and screening the data.”

Many of the MTurk best practices should be familiar to researchers as they are simply ported over from decades of learning how to administer surveys properly. The MTurk blog even has an article on how to identify workers that will be good at task completion.

As Sulik and coauthor Christine Cuskley put it in their 2022 preprint, “The burden for high-quality online data collection lies with researchers, not recruitment platforms.”

The authors of GPTurk don’t discuss their recruitment strategies, validation processes, or approach to pretesting so it's impossible to know if these best practices were followed.

Participant pay

In GPTurk the authors state the task pay was $1, but according to the “Reward” column5 in the dataset they provided it was even less, just $0.90.6 What does that work out to in terms of an implied hourly rate? Well, that depends on how long the task is expected to take.

The authors claim the implied hourly rate is $15/hr7 based on the estimate that the summarization task would “conservatively” take 4 minutes to complete. This 4 minute estimate seems dubious for a few reasons.

First, the authors themselves note that the task is “laborious for humans while being easily done with the aid of commercially available LLMs.” Correspondingly, the authors set the allotted task time for participants to an hour!8

Second, the theoretical foundations of the estimate don’t add up. Skilled readers typically read at a speed of about 300 words per minute,9 which means the passage itself would take a minimum of 80 seconds to read (and let's not forget, it contains dense medical terminology).10 Additionally, the average adult typing speed is around 50 words per minute,11 so typing the 100-word summary would take approximately two minutes. This leaves a mere 40 seconds to contemplate a comprehensive and “high-quality summary” of a complex medical abstract. This theoretical estimate does not seem plausible.
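The time budget can be laid out as a short calculation. This is only a sketch of the back-of-the-envelope estimate, using the word counts and speeds quoted in this article; the variable names are mine, and real reading and typing speeds will vary from person to person.

```python
# Inputs quoted in the article.
passage_words = 400        # approximate abstract length
summary_words = 100        # minimum summary length
reading_wpm = 300          # skilled reading speed
typing_wpm = 50            # average adult typing speed
budget_seconds = 4 * 60    # the authors' 4-minute estimate

# Time needed just to read the passage and type the summary.
reading_seconds = passage_words * 60 / reading_wpm
typing_seconds = summary_words * 60 / typing_wpm

# Whatever is left over is the time available for thinking.
thinking_seconds = budget_seconds - reading_seconds - typing_seconds

print(f"Reading:  {reading_seconds:.0f} s")
print(f"Typing:   {typing_seconds:.0f} s")
print(f"Thinking: {thinking_seconds:.0f} s")
```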

But don’t take my word for it! Well, do take my word for it, but rely on data from the study itself. The dataset includes a “work time” column so it’s possible to examine how long it took participants to actually complete the summarization task. When we examine times from this column, we find that they are much longer than four minutes. Out of the 46 summary write-ups, only 5 were completed in four minutes or less. Depending on the measure of central tendency you prefer, the typical Turker in the study took anywhere between 8 minutes and 26 minutes to finish the task. The most experienced Turker in the sample took 31 minutes.12
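If you want to run the same kind of check yourself, a sketch along these lines may help. It is not the code from my repository: the function name is hypothetical, it simply takes a list of work times in seconds (however you load them from the dataset), and the example values below are made-up placeholders, not the study's data.

```python
import statistics


def summarize_work_times(seconds, threshold_minutes=4):
    """Summarize task durations (given in seconds) in minutes."""
    minutes = [s / 60 for s in seconds]
    return {
        "n": len(minutes),
        # How many tasks finished within the claimed time estimate.
        "at_or_under_threshold": sum(m <= threshold_minutes for m in minutes),
        "mean_minutes": statistics.mean(minutes),
        "median_minutes": statistics.median(minutes),
        # Mode is taken over whole minutes so that near-identical times group.
        "mode_minutes": statistics.mode([round(m) for m in minutes]),
    }


# Placeholder values for illustration only; NOT the GPTurk data.
example_seconds = [150, 240, 480, 480, 720, 1500]
summary = summarize_work_times(example_seconds)

for name, value in summary.items():
    print(f"{name}: {value}")
```

The mean, median, and mode can differ a lot when a few tasks run very long, which is why the range of "typical" times quoted above is so wide.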

Contrary to the hourly pay claimed by the authors — $15/hr — accounting for the actual reward of $0.90 and the typical work times, the resulting hourly wage is somewhere between $6.75/hr and $2.08/hr, using the modal and mean work times respectively.
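The implied hourly rate is just the reward scaled up to sixty minutes. Here is a minimal sketch using the rewards and durations discussed in this article; the helper name is my own invention.

```python
def implied_hourly_rate(reward_usd, minutes):
    """Hourly wage implied by a flat reward for a task of a given length."""
    return reward_usd * 60 / minutes


# (label, reward in USD, task duration in minutes), all taken from the article.
scenarios = [
    ("Stated reward, 4-minute estimate", 1.00, 4),
    ("Dataset reward, 4-minute estimate", 0.90, 4),
    ("Dataset reward, 8-minute modal time", 0.90, 8),
    ("Dataset reward, 26-minute mean time", 0.90, 26),
]

rates = {label: implied_hourly_rate(reward, minutes)
         for label, reward, minutes in scenarios}

for label, rate in rates.items():
    print(f"{label}: ${rate:.2f}/hr")
```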

These figures are actually in line with Turker hourly pay rates,13 though the pay could create a self-selection problem since Turkers opt in to tasks based on task reward and predicted effort. Amongst experienced Turkers, tasks that pay $1 are expected to net out to $10.67 per hour as they are more cognitively demanding.14

But is the $1 payment in GPTurk (again really $0.90) in line with comparable tasks from other requestors? The comparability aspect is hard for me to judge, but we do know that Turkers coordinate and try to identify good requesters via tools such as Turkopticon.15

As a quick check I actually signed up to be a Turker and browsed available tasks. Here are a few data points as reference:

Oleg Urminsky from the University of Chicago’s Booth School is paying $1 for a 3-4 minute survey.

HUGC is paying $0.05 to moderate hotel reviews.

SFR is paying $11 to evaluate the quality of news article summaries.

Here’s what Sulik had to say on Twitter about the pay issue.

Ultimately, it’s hard for me to know what to make of Sulik’s pay critique. There is certainly a fairness aspect to consider, but also the pay’s influence on ChatGPT usage. I agree that $1 for 10 minutes of hard thinking is underpaid; that said, the task was opt-in, and based on the available data, a significant number of Turkers willingly invested considerable time completing it.

As a follow-up it might be interesting to partially replicate GPTurk with varied pay to determine if that has an impact on the propensity to use ChatGPT.16

Conclusion

GPTurk asks an important question, but the study methodology doesn’t support the conclusion that there are substantial concerns regarding humans using LLMs on MTurk text tasks or in crowdsourced research more broadly.

To bolster their conclusion the authors would need to present a compelling case for external validity, demonstrating how the findings from a mere 44 participants can be generalized to show broader crowdsourcing scenarios involving LLM usage. Crucially, they’d want to show this for high-quality Turkers — the subset researchers and other requestors with critical human-centered needs should be leveraging for important task completion — as any undisclosed LLM usage within this group would raise potential concerns regarding the validity of the results.

One way the authors might achieve this conclusion is through showcasing a rigorous recruitment process and by demonstrating that participants were provided clear instructions. If it could be shown, via robust screening and validation, that participants in this study were high-quality Turkers, and if they were explicitly instructed not to use ChatGPT, and yet still a substantial portion chose to leverage an LLM, that might indeed raise concerns.17 (Though personally, even then I would like to see the study replicated with a larger sample size and a wider variety of text-based tasks.)

Instead, in my interpretation, the authors' findings suggest that some members of the general population of one crowdsourcing platform (MTurk) might be inclined to use ChatGPT for relatively complex text summarization tasks when offered average (below average?) pay, in the absence of clear instructions disallowing the use of language models. There are a lot of caveats in there.

In my view the authors are merging two related but distinct concepts: the emergence of LLM usage for task completion and the issues associated with that usage. The utilization of LLMs for rote and laborious text tasks is undoubtedly on the rise, but that’s a good thing! It enhances the efficiency of Turkers and potentially enables requestors to directly employ LLMs, bypassing the need for crowdsourcing altogether. But that’s a different state of affairs than LLMs universally “diluting the human factor.” The human factor remains intact in crowdsourcing when we align task objectives, participant selection, and study design with the right crowdsourcing platform and methodology.

Addendum: Response from authors

The following response is from Manoel Ribeiro, one of the paper’s authors.

I sincerely thank you for taking the time to read and critique our paper.

I agree with some of the points you made, namely that:

The sample size is small.

They were not explicitly told NOT to use ChatGPT.

We did not extensively pre-test our sample.

Pay should have been higher.

Concluding that "Mturk is just chatgpt" is silly.

Some orthogonal comments/things I disagreed with:

Note that the notion of "time to complete the task" differs from how much we expect. MTurks often accept multiple tasks and execute them in parallel --- in fact, the best way to get angry emails from Mturkers is to set a very low time to complete task. While I agree with your estimates of reading/writing, I also do not believe that the time to complete metric from AWS is very reliable (largely for the same reasons)...

I disagree we oversold it. We report the exact finding of our paper: we did find that "MTurkers widely use LLMs in a summarization task." We posted a summarization task, and MTurkers used ChatGPT to solve it.

I found you overly optimistic in the following sentence: "The human factor remains intact in crowdsourcing when we align task objectives, participant selection, and study design with the right crowdsourcing platform and methodology." This is an extrapolation — it assumes that, because these strategies worked with spammers, they will work with sophisticated use of LLMs — crowdworkers have a clear short-term incentive to use these tools, and detection is not easy.

We are re-running this study multiple times across platforms and with different settings (including using better-selected samples, better pay, clear instructions not to use LMs, etc.), hopefully addressing many of the limitations you raised.

The dirty little secret of social science research is that participants are like 90% Turkers or college students participating for credit.

GPTurk was inspired by “Message distortion in information cascades” by Manoel Horta Ribeiro, Kristina Gligoric, and Robert West, which looked at how chains of summaries were distorted (i.e. I summarize a text and then you summarize my summary and so on).

The “Artificial” triplet is based on a quote from Jeff Bezos who called MTurk “artificial artificial intelligence,” meaning MTurk seemed to be an AI task agent, but it was really just humans. But if the humans that appeared to be AI are now actually using AI you get “artificial artificial artificial intelligence.” Just wait until ChatGPT starts subcontracting work to humans and we can have “artificial artificial artificial artificial intelligence.”

See “Demographics and Dynamics of Mechanical Turk Workers” from Proceedings of WSDM 2018. “By using our data, the Chao approach gives a lower bound of 97,579 workers, which is compatible with our results.” Note that there are surveys with samples that are very small compared to the population, but in order to make statements about the broader populations certain assumptions are needed.

According to Amazon’s MTurk documentation the “Reward” column is “The US Dollar amount the Requester will pay a Worker for successfully completing the HIT.”

MTurk does allow requestors to pay bonuses so it’s possible every completion received a 10-cent bonus, although that wasn’t specified in the paper.

Using the $0.90 figure along with the 4-minute estimated duration actually gives an implied hourly rate of $13.50/hr, not $15/hr.

The data set shows that the “AssignmentDurationInSeconds” column was set to 3600 seconds. According to Amazon’s MTurk documentation, the definition of this field is “The length of time, in seconds, that a Worker has to complete the HIT after accepting it.”

See “Reading faster” from International Journal of English Studies. “What this research shows is in normal skilled reading most words are focused on. Because there are limits on the minimum time needed to focus on a word and on the size and speed of a jump, it is possible to calculate the physiological limit on reading speed where reading involves fixating on most of the words in the text. This is around 300 words per minute.”

Here is one example abstract to be summarized: “First Results of Phase 3 Trial of RTS,S/AS01 Malaria Vaccine in African Children: An ongoing phase 3 study of the efficacy, safety, and immunogenicity of candidate malaria vaccine RTS, S/AS01 is being conducted in seven African countries. From March 2009 through January 2011, we enrolled 15,460 children in two age categories — 6 to 12 weeks of age and 5 to 17 months of age — for vaccination with either RTS, S/AS01 or a non-malaria comparator vaccine. The primary end point of the analysis was vaccine efficacy against clinical malaria during the 12 months after vaccination in the first 6000 children 5 to 17 months of age at enrollment who received all three doses of vaccine according to protocol. After 250 children had an episode of severe malaria, we evaluated vaccine efficacy against severe malaria in both age categories. In the 14 months after the first dose of vaccine, the incidence of first episodes of clinical malaria in the first 6000 children in the older age category was 0.32 episodes per person-year in the RTS, S/AS01 group and 0.55 episodes per person-year in the control group, for an efficacy of 50.4% (95% confidence interval (CI), 45.8 to 54.6) in the intention-to-treat population and 55.8% (97.5% CI, 50.6 to 60.4) in the per-protocol population. Vaccine efficacy against severe malaria was 45.1% (95% CI, 23.8 to 60.5) in the intention-to-treat population and 47.3% (95% CI, 22.4 to 64.2) in the per-protocol population. Vaccine efficacy against severe malaria in the combined age categories was 34.8% (95% CI, 16.2 to 49.2) in the per-protocol population during an average follow-up of 11 months. Serious adverse events occurred with a similar frequency in the two study groups. Among children in the older age category, the rate of generalized convulsive seizures after RTS, S/AS01 vaccination was 1.04 per 1000 doses (95% CI, 0.62 to 1.64). 
The RTS, S/AS01 vaccine provided protection against both clinical and severe malaria in African children.”

See Figure 2, Panel 1 from “Observations on Typing from 136 Million Keystrokes.”

Technically, this was just the most experienced Turker with GPTurk as the requestor. That individual had completed 19 previous tasks for the GPTurk requestor; the next highest was 3.

See “A Data-Driven Analysis of Workers’ Earnings on Amazon Mechanical Turk.” “Our task-level analysis revealed that workers earned a median hourly wage of only ~$2/h, and only 4% earned more than $7.25/h. While the average requester pays more than $11/h, lower-paying requesters post much more work.”

See discussion of Figure 7 in “A Data-Driven Analysis of Workers’ Earnings on Amazon Mechanical Turk.” “Working on a HIT with $1.00 reward should yield $8.84/h.” Using the BLS inflation calculator this works out to about $10.67.

See “A Data-Driven Analysis of Workers’ Earnings on Amazon Mechanical Turk.” “Workers seek to find good requesters so they can earn fairer rewards. To do so they use tools such as Turkopticon and information from sites like Turker Nation.”

“Partially replicate” because I think changes are needed to the original study.

Or it might just mean that MTurk becomes unviable for some kinds of research and other platforms take its place.

This criticism misses the mark and seems to come from a place of confirming biases rather than honest interrogation of the data. GPTurk isn't perfect in its methodology, but the findings clearly support the preprint's stated conclusions.

Your interpretation demonstrates a concerning lack of ability to generalize findings:

"the authors' findings suggest that some members of the general population [they only ever claimed it was some] of one crowdsourcing platform (MTurk) [why wouldn't this generalize to other crowd-work platforms?] might be inclined to use ChatGPT [provably do use ChatGPT] for relatively complex text summarization tasks [for their assigned tasks that they elected to perform] when offered average (below average?) pay [when offered market rate pay], in the absence of clear instructions disallowing the use of language models [when using previously successful study protocols]"

I summarize your points (without the use of ChatGPT) below and respond:

1. Small sample

The small n of GPTurk is a drawback for sure, but not for the reason you state. Higher n would be desirable for a more precise estimation of the proportion of workers.

Your issue with the study seems to be that their sample of 44 only includes a tiny slice of Turkers. This ignores the whole idea of sampling. To detect a population-level effect, you can take a small sample, estimate the proportion of that effect, and generalize it to the population. Studies that investigate heart attack interventions that capture 0.04% of the global heart attack population are taken as valid.
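To make the sampling point concrete, here is a rough sketch of how precision depends on the sample size rather than on the share of the population sampled. It rests on assumptions that go beyond anything stated in the paper: a simple random sample, a normal approximation, a point estimate of 40% (the "about 40%" figure quoted in the article), and a population of 100,000. It also ignores any error in the LLM-detection classifier itself, so treat the numbers as illustrative only.

```python
import math


def margin_of_error(p, n, population=None, z=1.96):
    """Approximate 95% margin of error for a proportion (normal approximation).

    If a population size is given, apply the finite population correction.
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    if population is not None:
        standard_error *= math.sqrt((population - n) / (population - 1))
    return z * standard_error


p_hat = 0.40          # assumed point estimate, from the article's "about 40%"
population = 100_000  # assumed population size, from the 2018 estimate

moe_small = margin_of_error(p_hat, 44)
moe_small_fpc = margin_of_error(p_hat, 44, population)
moe_large = margin_of_error(p_hat, 1000)

print(f"n=44:                 +/- {moe_small * 100:.1f} points")
print(f"n=44, with FPC:       +/- {moe_small_fpc * 100:.1f} points")
print(f"n=1000:               +/- {moe_large * 100:.1f} points")
```

Under these assumptions the finite population correction barely changes the result, while a larger n narrows the interval considerably. Whether the simple-random-sample assumption holds for a self-selected MTurk sample is a separate question.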

2. Clear instructions/study design

The instructions given were typical-use conditions. If anything, the conclusion you could draw from this paper is "Oh, it seems like Turkers frequently use ChatGPT to perform their assigned tasks! It would be wise to include "don't use ChatGPT" in future prompts to discourage their use."

Instead, you bizarrely jump to defend Turkers and claim that only a tiny amount use them, and we don't even really know if they do, and if they do it's because they aren't being paid enough, or because the task was too complex, or because they weren't told they couldn't. It is clear your a priori stance is that Turkers are being maligned by this paper and you are emotionally invested in their defense rather than an objective discussion of the research.

3. Fair pay

Again, the conditions created in GPTurk are the typical-use conditions by social scientists. If anything, the point you're making by saying they should have paid more is that in order to get the same quality of MTurk data as last year, researchers now have to pay more for it. That is a massive finding with huge implications for typical-use MTurk!!

Furthermore, you say workers are "underpaid" based off of what you feel is fair for an hour of labour. What special economic insights are you blessed with that lets you determine fair prices? In this world, we use markets to determine a fair price. Turkers choose tasks to perform based on whether they think it's worth it or not. The very task and price point done in the study are based off of past MTurk studies. Whether you personally would elect to be a Turker at market rates is irrelevant, but this study did not "underpay" them.

In general, the fundamental takeaway from this pre-print is that LLMs are being used by Turkers in typical-use scenarios. This degrades the quality and increases the cost (both in labour and money) of collecting this type of data. Platitudes like "of course MTurk isn't reliable!" and "the onus of good data collection is on the researchers" are irrelevant to the question of whether LLM usage is now prevalent on MTurk (it is) and whether that negatively impacts research (it does).