Majority of Americans support paying creators if their art is used to train AI models

Americans also favor labeling AI-generated content and more transparency around what data AI companies use to train their models.

This article is part of my ongoing coverage of the 2023 Generative AI & American Society Survey, a nationally representative survey of Americans conducted by the National Opinion Research Center (NORC) at the University of Chicago. Known for producing some of the most scientifically rigorous surveys in the United States, NORC was my chosen partner for this project, which I both wrote and funded. For more details on the survey, you can check out the project FAQ.

Americans support paying artists if their work is used in AI model training

The rise of Generative AI has placed creators, including photographers, authors, musicians, and other artists, squarely in the spotlight. New Generative AI systems are trained on large datasets, some of which contain copyrighted material. While many artists argue that such usage infringes on their copyrights, AI companies assert that their actions fall under the fair use doctrine of U.S. copyright law.[1]

Recent legal challenges,[2] such as comedian Sarah Silverman and a group of authors suing OpenAI and Meta for copyright infringement, highlight this contentious issue. The outcome of these lawsuits remains uncertain. In July, a court asked the artists in one copyright lawsuit against Midjourney and two other text-to-image platforms to provide more evidence of infringement before the case could move forward.

Some advocates in the AI community have suggested that Generative AI companies pay creators in exchange for using their copyrighted material during model training.

Setting aside the legal questions about using artists' creations for AI training, the 2023 Generative AI & American Society Survey shows that most Americans are in favor of compensating artists when their work is used in this way (or allowing them to opt out).

A majority of Americans (57%) agree that artists should be compensated, while only 8% disagree. Just under a quarter of Americans (23%) neither agree nor disagree.

In gauging public sentiment about paying artists or allowing them to opt out, the survey question included both the potential benefits and tradeoffs of such a policy. Americans’ agreement was solicited using the following question wording:

Idea: Artists, authors, and musicians should be paid if AI systems use their works to learn. Or they should have the choice to opt-out.

Benefit: Creators could get recognized and financially rewarded for their work.

Tradeoff: This could limit the diversity of AI training data, affecting the quality of AI content. It might also increase the cost of using AI systems.

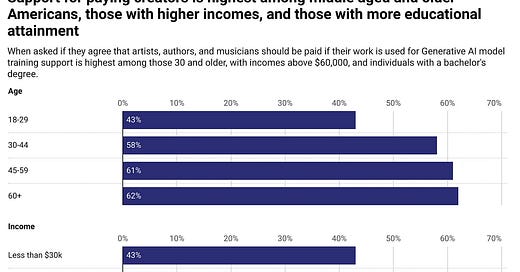

While all demographic groups show at least moderate support, there was variation. Younger Americans (those under 30) were the least likely to support such a proposal, as were low-income Americans (those making less than $30,000 per year) and Americans with low educational attainment.

Conversely, support was stronger among Americans aged 30 and over and among those with incomes above $60,000. Of all the demographic groups analyzed, those with a bachelor's degree or higher showed the highest level of support for the policy, at 71%.

Americans also favor labeling AI content and more transparency about what data is used for AI model training

Creators encounter challenges in determining whether and how their works are used for AI training, partly because some companies, such as OpenAI, do not disclose their training data.[3] The 2023 Generative AI & American Society Survey indicates that half of Americans think AI companies should be more transparent about how Generative AI models work and what data are used to train them. Another 30% neither agree nor disagree, while only 8% explicitly disagree.

Some Generative AI companies, such as OpenAI, cite competitive and safety considerations as reasons for not disclosing specific details about the training and implementation of resource-intensive projects like GPT-4. The potential competitive cost of revealing training data and model details was accounted for in the survey question wording:

Idea: Companies should tell us how their AI models work and what data they use.

Benefit: This approach could foster trust and allow users to pick the AI systems they feel comfortable using.

Tradeoff: This could give away AI company secrets and let AI creators in other countries get ahead. In addition, knowing they have to disclose details might make companies less eager to innovate.

Labeling AI-generated content has been proposed as a way to counter misinformation. However, it could also give consumers the option to avoid content that raises ethical concerns for them, such as the unauthorized use of creators' works. Some major content platforms, such as TikTok, have already begun enforcing such labeling policies. According to TikTok's updated guidelines, any content that uses AI to create a realistic depiction of a scene must be clearly labeled as AI-generated.

The 2023 Generative AI & American Society Survey found that a clear majority of Americans, 63%, support labeling AI-generated content, while a mere 4% oppose it. Another 23% neither agree nor disagree with the policy.

As I’ve discussed elsewhere, labeling AI content risks unnecessarily stigmatizing content that is engaging, educational, or otherwise useful. However, this potential tradeoff was explicitly taken into account in the wording of the survey question used to gauge public sentiment:

Idea: Content created by AI should have a clear label saying it's AI-generated. AI-generated content includes images, videos, stories, and music.

Benefit: This could enable users to understand content origins and make informed decisions.

Tradeoff: People might engage with content less if they know it's made by AI even if the content is useful or entertaining. That's because it could seem less genuine or appealing.

How were these figures determined?

Results from the 2023 Generative AI & American Society Survey came from the National Opinion Research Center’s probability-based AmeriSpeak panel of adults ages 18 and over. The sample size was 1,147, and responses were weighted to ensure national representation. The exact questions posed to respondents about creator payment, AI transparency, and AI labeling are shown below. For more information, please refer to the FAQ page.

Paying creators question

The following are some ideas for potential changes to how Generative AI systems and companies operate. Each idea has benefits, but there could also be downsides, or "tradeoffs." We've included potential benefits and tradeoffs for context, but there might be more that we haven’t mentioned.

Indicate how much you agree or disagree with each idea. Keep in mind, your opinion is only about the idea itself. The benefit and tradeoff might help guide your opinion.

Idea: Artists, authors, and musicians should be paid if AI systems use their works to learn. Or they should have the choice to opt-out.

Benefit: Creators could get recognized and financially rewarded for their work.

Tradeoff: This could limit the diversity of AI training data, affecting the quality of AI content. It might also increase the cost of using AI systems.

Strongly agree

Agree

Neither agree nor disagree

Disagree

Strongly disagree

Don’t know

AI-generated content labeling question

The following are some ideas to make AI systems more open and responsible. Each idea has benefits, but there could also be downsides, or "tradeoffs." We've included potential benefits and tradeoffs for context, but there might be more that we haven’t mentioned.

Indicate how much you agree or disagree with each idea. Keep in mind, your opinion is only about the idea itself. The benefit and tradeoff might help guide your opinion.

Idea: Content created by AI should have a clear label saying it's AI-generated. AI-generated content includes images, videos, stories, and music.

Benefit: This could enable users to understand content origins and make informed decisions.

Tradeoff: People might engage with content less if they know it's made by AI even if the content is useful or entertaining. That's because it could seem less genuine or appealing.

Strongly agree

Agree

Neither agree nor disagree

Disagree

Strongly disagree

Don’t know

AI company transparency question

The following are some ideas to make AI systems more open and responsible. Each idea has benefits, but there could also be downsides, or "tradeoffs." We've included potential benefits and tradeoffs for context, but there might be more that we haven’t mentioned.

Indicate how much you agree or disagree with each idea. Keep in mind, your opinion is only about the idea itself. The benefit and tradeoff might help guide your opinion.

Idea: Companies should tell us how their AI models work and what data they use.

Benefit: This approach could foster trust and allow users to pick the AI systems they feel comfortable using.

Tradeoff: This could give away AI company secrets and let AI creators in other countries get ahead. In addition, knowing they have to disclose details might make companies less eager to innovate.

Strongly agree

Agree

Neither agree nor disagree

Disagree

Strongly disagree

Don’t know

If you have additional questions, comments, or suggestions, please leave a comment below or email me at james@96layers.ai. To help advance the understanding of public attitudes about Generative AI, I’m making all raw data behind the 2023 Generative AI & American Society Survey available free of charge. Please email me if you’re interested.

[1] For example, see Microsoft’s motion to dismiss the lawsuit over GitHub Copilot.

[2] Recent lawsuits brought against AI companies include:

A class-action lawsuit against text-to-image companies Stability AI (creator of Stable Diffusion) and Midjourney, alleging that they infringed artists' rights by scraping images from the web for use in AI model training. This lawsuit was filed in the United States.

A similar lawsuit from Getty Images, the stock photo company, against Stability AI. This lawsuit is occurring in Britain.

A lawsuit against Microsoft, GitHub, and OpenAI. The lawsuit pertains to GitHub Copilot, a software development tool that uses AI to suggest code. Microsoft owns GitHub, and OpenAI developed the AI model that powers Copilot (OpenAI is an independent research organization that partners with Microsoft). The plaintiffs allege that their human-written code was used unlawfully during the AI model's training, infringing their copyrights.

[3] In Section 2 of their “GPT-4 Technical Report,” OpenAI notes that, “Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.” Also see this March 2023 article from The Verge, which has additional details, including a further explanation from Ilya Sutskever, OpenAI’s chief scientist and co-founder.