Tracing AI Data Origins

A conversation with Shayne Longpre and Robert Mahari of the Data Provenance Initiative

Let's say you're on the verge of developing an awesome new AI language model. But here's a critical question: how do you ensure that your use of training data aligns with its licensing terms? How do you even find out what those licensing terms are? Here's another question: how do you find out where a dataset came from and what's inside it? And how do you prevent the dataset from introducing bias and toxicity into your model?

These are some of the key questions we're discussing in this week's episode. I spoke with Robert Mahari and Shayne Longpre from the Data Provenance Initiative, a research project and online tool that helps researchers, startups, legal scholars, and other interested parties track the lineage of AI fine-tuning datasets. Shayne and Robert are both PhD candidates at MIT's Media Lab, and Robert is also a J.D. candidate at Harvard Law School. We had a fantastic conversation that I can't wait to share. This transcript has been lightly edited for clarity.

I'm joined today by Robert Mahari and Shayne Longpre of the Data Provenance Initiative. Hey guys, how are you doing? Welcome to the podcast.

Shayne:

Great pleasure to be here. Thanks, James.

Robert:

Yeah, thanks for having us, James.

Yeah, thanks for being here, guys. We'll get into all of the details of the initiative in a little bit. But to get started, Shayne, why don't you give us an overview of the project?

Shayne:

Sure. So, the original objective of this project was to trace the provenance of many of the popular datasets online for training foundation models or large language models: the sources they originally came from, where on the web they were scraped, whether machines were involved in generating part of that data, and whether humans annotated it. We also looked at what other curation was involved, what languages the data is in, and what licenses were attached to it at different stages of its curation and development into the final datasets that are widely used by the public, by startups, and by non-profit and open source organizations. It's essentially a very large public audit of data that's popular in the AI space. We wanted to analyze that full ecosystem and make it easier for developers to trace which data would be most appropriate for their legal and ethical criteria, and for whatever application they're building.

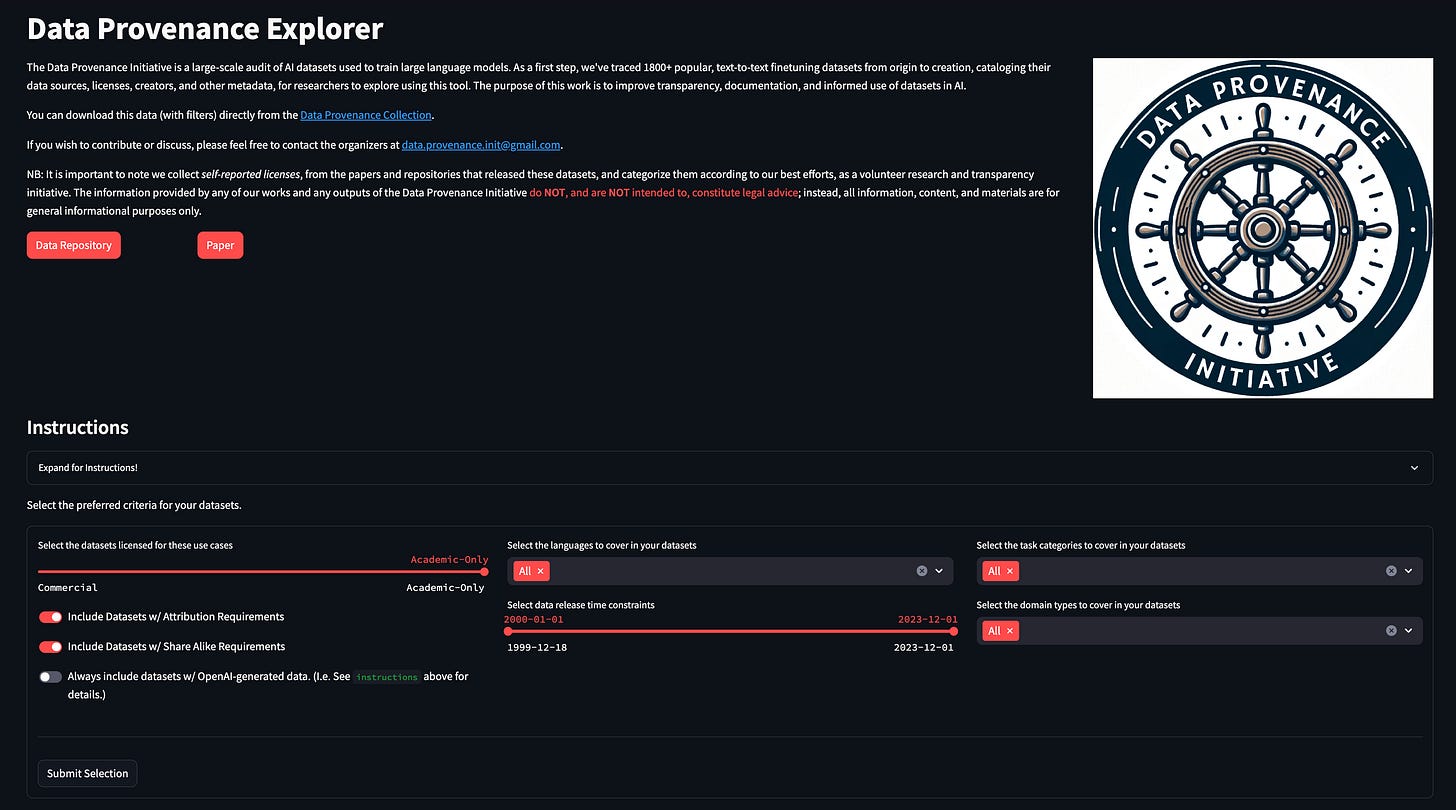

Yeah. And we should probably mention before we continue that there are actually two parts to the Initiative. There's the Data Provenance Explorer, an online tool researchers can use to look at the licenses, lineage, and all the other information you just mentioned. And there's an accompanying research paper, linked from the online Explorer, that has a lot of great information and a breakdown of the project.

One of the things in that research paper is a list of problems that can be caused by not having good data provenance and lineage. I'll just read a few of them here. One, data leakage between training and test data. Two, exposure of personally identifiable information. Three, AI tools that have unintended biases or other kinds of negative behaviors. So let's talk more about the risks of not having good data provenance.

Shayne:

Yeah. So maybe a little background is important here. People assume that if you trained a large language model on data that you found and put together, you know what's in it and you understand the data, which is, by the way, the key ingredient in these models and in their resulting behavior: what they can and can't do. But the actual trend in the field is that people don't just take one dataset. They take massive collections of datasets, which are themselves derived from other datasets.

So in the paper we talk about the example of the SQuAD dataset, a very popular 2015 or 2016 dataset for question answering that was used by researchers. Passages were scraped from Wikipedia, and then human annotators wrote questions that were answered in each passage. So the model would be given a question and learn to find the answer in the passage. Wikipedia is the original source, with human annotators on top. After that, it was reformatted in various ways and packaged into a competition called MRQA. That was later packaged into a large collection of datasets called UnifiedQA, which was then put into PromptSource, which was in turn collected, re-licensed, and put into the Flan Collection. The Flan Collection is now thousands of datasets packaged together, where the original sources, qualities, and characteristics of the data are all blurred together, because we don't really know in detail what was in all these thousands of datasets.

And so to answer your question, that causes problems. Researchers might not remember or know whether one or two of those datasets contain the same examples that are going to be in their test set. That means they could evaluate a model and say, “Wow, it did really well,” when it actually trained on, or saw, the exact examples they're testing on, so it's not a fair evaluation of its abilities. There could be bias and toxicity in that dataset that they didn't find. There could be privacy leakage. There could be languages or modalities they didn't expect.
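To make the train/test leakage problem concrete, here is a minimal Python sketch of an overlap check. It assumes examples are plain strings and treats an exact match after lowercasing and collapsing whitespace as contamination; both are simplifying assumptions (real decontamination work often uses n-gram overlap instead), and the function names are hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text.strip().lower())


def find_overlap(train: list[str], test: list[str]) -> list[str]:
    """Return the test examples whose normalized form also appears in the training data."""
    train_set = {normalize(t) for t in train}
    return [t for t in test if normalize(t) in train_set]


# Toy data: the first test question differs from a training question only in case and spacing.
train = ["What is the capital of France?", "Who wrote Hamlet?"]
test = ["what is the capital of  France?", "What is the capital of Japan?"]
print(find_overlap(train, test))
```

An exact-match check like this is cheap but misses paraphrases, which is one reason knowing a dataset's lineage matters: it tells you which upstream sources to check in the first place.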

All sorts of things can happen that skew our ability to understand the behavior of the model, because of the lack of documentation and transparency around these datasets. So the goal of our project is to structure the properties, qualities, provenance, and licenses of those initial datasets, so that when you collect a massive composition of them, you can infer (because it's composable) that the resulting composition has a certain percentage of data that's English or Spanish or French or code. It has all the associated licenses, with links you can see. So you can get some sense of how the datasets interoperate, or might interoperate, and also what original sources were in the data that you might have forgotten about or lost along the way.
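The composability point can be sketched as a weighted average. This hypothetical example assumes each dataset's metadata records an example count and the fraction of its examples in each language (the dataset names and numbers are made up). The share of a language in the combined collection is then that language's example count summed across datasets, divided by the total number of examples.

```python
from collections import Counter

# Hypothetical per-dataset metadata: example counts and per-language fractions of those examples.
datasets = {
    "dataset_a": {"examples": 1000, "languages": {"en": 0.9, "es": 0.1}},
    "dataset_b": {"examples": 3000, "languages": {"en": 0.5, "fr": 0.2, "code": 0.3}},
}


def composition_languages(meta: dict) -> dict[str, float]:
    """Weight each dataset's language fractions by its example count and return overall shares."""
    counts: Counter = Counter()
    total = 0
    for info in meta.values():
        n = info["examples"]
        total += n
        for lang, frac in info["languages"].items():
            counts[lang] += n * frac  # estimated number of examples in this language
    return {lang: c / total for lang, c in counts.items()}


print(composition_languages(datasets))
```

Weighting by example count is itself an assumption; weighting by tokens would give different shares when examples vary a lot in length.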

I want to talk more in a moment about training and how that works, and differentiate pre-training and fine-tuning to give listeners a better sense of the Initiative, its importance, and where it fits in the ecosystem. Before we continue, though, Robert, I did want to ask: there are 17 authors on the main paper, from all over. Some are from industry and some are from academia. How did you all find each other, and how did this initiative come about?

Robert:

So this is really something where I need to credit Shayne. He has an incredible knack for getting people together and pulling in the same direction. At a high level, the topics we tackled here are policy and legal topics in some ways, but they're tackled in a very computer science way. And we were able to bring together people from both sides. We have people from the machine learning community and people from the legal community, experts who helped guide the research. And then we had a number of people who just did an incredible amount of work. You have to realize that when you have 1,800 datasets, with different sources spread across the web, different aggregators, and dozens and dozens of papers outlining what's in the datasets, what kind of license they carry, and so on, that's a huge amount of work.

So we devised this starting not that long ago, I think around April or May of 2023, when Shayne realized that this was a big issue and started bringing these people together. I joined early on as the legal person. Then over the summer this group came together and was able to assemble this effort. And since then, the team has actually grown even more. We partnered with a clinic at Boston University Law School, where students are supervised by a professor, and they helped us take the work we did in Data Provenance and package it for the U.S. Copyright Office as a legal comment on how to think about the differences between pre-training data and fine-tuning data and some of the legal implications.

And now we're launching phase two of this project, where we're hoping to extend beyond fine-tuning data to pre-training data and to think about some more meta questions like, “Should we really have a standard for data provenance?” and “What would that look like?” We're also exploring some follow-on research questions. If you play around with the Data Provenance Explorer, you'll quickly see that a limited number of countries are really contributing to the creation of datasets. Similarly, the languages these datasets are in are somewhat limited. So we're doing follow-on work on Western centricity and the biases that are encoded in these datasets in interesting ways. So yeah, lots of work, and many hands make light work.

And did you and Shayne know each other before this?

Robert:

Yeah.

And how was that? Did you go to school together or grow up together?

Robert:

So Shayne and I… I'm doing a J.D.-PhD, so a kind of combined degree, and I've been a PhD student at the MIT Media Lab for about four years now. Shayne had reached out before joining (again, his knack for networking), and we did some joint projects related to computational law. Then Shayne joined the Media Lab as a PhD student, so now we're both in the same program. But I think this is the first (tell me if I'm wrong, Shayne) real academic collaboration that we've done together.

Shayne:

So, yeah, we were ardent rivals, but it was clear we needed some legal expertise to make this project work, and Rob really drove that effort.

Nice. Okay. One last question on the project overall: the logo for the Initiative is a ship's wheel. Is there any significance to that?

Shayne:

Sure, I can take that. One of the collaborators created it with one of the many tools you can now use to generate images, then edited it in Photoshop. I think the idea is that there's a stormy ocean that people are trying to navigate with respect to data, steering their ships through the storm. And the Data Provenance Explorer is like a map that lets you set the right course and get the right data to train the right models responsibly. So something along that analogy, which we need to articulate better.

Nice. No, I love it. That's awesome. Let's move on and talk a little about how these large language models are trained, to give listeners who aren't as familiar with the training process an overview. Generally speaking, there are two phases to model training. There's the pre-training stage, which uses a ton of data; we're talking about significant portions of the internet. This is really about giving the model a base set of knowledge. After that, there's a second phase called fine-tuning. It uses less data than pre-training, but still a lot by most standards, and it's really about honing the model to make it more useful. Can you draw out the distinction between pre-training and fine-tuning a little more, and particularly talk about the importance of fine-tuning for the end behavior of a language model like ChatGPT or a similar tool?

Robert:

Shayne, why don't you take this and then I can talk about some of the legal implications of what you're about to say.

Shayne:

That'd be perfect. Yeah, I can start. So for listeners that aren't familiar with the pre-training vs. fine-tuning paradigm, pre-training is where you imbue the model with all of this amazing world knowledge, and it begins to “understand” the structure of language, syntax, grammar, and semantics so that it can really just generate more text in a way akin to what a human does. And fine-tuning takes that world knowledge and actually makes it helpful for user interaction and makes it less harmful.

I'm talking about instruction fine-tuning or alignment fine-tuning, as some people might have heard it called. OpenAI calls it post-training. I like to give this example: if you asked a model that was pre-trained but not fine-tuned, “What is the capital of France?” it could give you the answer, but it might give you a really long-winded history because it thinks it's writing a Wikipedia page. It might also think it's writing a quiz and just produce tokens like (A) London (B) Paris (C) Berlin, never answer the question, then write a new question, “What is the capital of Japan?”, and keep going.

What instruction or alignment fine-tuning does is orient the model so that it produces the kind of answer we would expect: one that's actually helpful, useful, and not harmful, unlike many of the things you might find on the web. It's not going to say something rude, not safe for work, or offensive. We wanted to focus on those parts first. But in our next phase, the Initiative is starting to think more about the pre-training data, which is all the rest of the scrapeable web. Rob can maybe tell you a bit about why we started with fine-tuning.

Robert:

Yeah. So if you take a step back, fine-tuning, on the one hand, has enabled a lot of the recent breakthroughs in Generative AI, right? It's what actually makes these systems usable, rather than systems that know a lot about patterns in language but might not be that useful from a user perspective. At the same time, these fine-tuning datasets, instruction-tuning datasets, and all the others have gone a little unnoticed by the legal community, because what most people are familiar with is the idea that lots of people have contributed written content and images to the internet, which has now been scraped without their consent and is being used to train Generative AI models. And that's true.

But on top of that, you have a huge community of researchers who create fine-tuning and other supervised, highly curated datasets that exist for the sole purpose of training AI models, and who put them on the web as part of the AI research community. Those datasets are then used to create commercial models. This raises a number of challenges. Where does the data come from? Is it actually intended to be used commercially in this way? Do the dataset creators agree with this kind of use? Does this kind of use undermine the incentives to keep creating datasets? And so on and so forth.

But there's also a big legal distinction. At the end of the day, when I create an image, it has an artistic purpose, and when I write an article, it has an informational purpose, and those purposes are very different from training an AI model on the article or the image. By contrast, a highly curated fine-tuning dataset exists for the sole purpose of training machine learning models. It's a little complicated because, as Shayne outlined with the SQuAD dataset, there's underlying content, right? You have Wikipedia articles that were then used to generate expert annotations that exist to train AI models. But we argue that this additional expressive content has a very different legal status. Specifically, there's the principle of fair use, which is used to justify using articles, text, and images on the web to train AI. And it seems like fair use might not actually apply where content was created specifically to train an AI model. If fair use doesn't apply, that opens up a lot of interesting additional research questions and uncertainties. So, for some of those reasons, we started with fine-tuning datasets. But like I said at the beginning, we're now starting to think about broadening that scope and including other datasets.

Yeah. I want to talk more about the legal aspects of these datasets, because I found that part one of the most fascinating pieces of the entire Initiative. But to stick with training for a moment: the Data Provenance Initiative currently focuses not just on fine-tuning datasets, but on language fine-tuning datasets specifically. There are obviously other kinds of datasets, for example, image datasets used to train text-to-image systems such as Midjourney and DALL-E, two popular image systems listeners might have heard of. And there are datasets used to train AI speech systems. Talk a little about the initial focus on language datasets and why the Initiative decided to start there.

Shayne:

I think times are quickly changing, but ChatGPT was sort of the first hotbed (not the first, but one of the hotbeds) of this research, and where people focused first. There are many, many text datasets in the community, with a lot of rich variety; there are a bit fewer in the vision community, although it's still very rich, and fewer again in the speech community. A lot of the initial training, for a number of reasons (compute constraints and the availability of text, I think), started in the text community. So that's where we thought the most help was needed.

Last question on training before we move on to licensing and the other legal aspects of these datasets. I was curious, how are researchers actually finding these fine-tuning datasets today? So let's imagine a scenario. I'm a researcher, I'm creating a new AI language model, the next version of GPT or whatever. I've done the pre-training phase. Now I want to do fine-tuning to make the model as useful as possible. Where do I actually find these fine-tuning datasets? Am I creating one myself? Am I going to go buy one from a company? Am I just searching the internet, hoping I can find one lying around in an online data repository? How does that actually work in practice?

Shayne:

I'm very glad you asked this question. It's chaos out there. It's happening so quickly. A lot of the best and most popular datasets for instruction or alignment tuning, at least the ones that are publicly available rather than proprietary, have come out in the last two or three years, if not more recently.

So people either know about them because they keep close tabs on what's going on in the community, or they use Hugging Face Datasets, a huge platform where people upload new datasets right after they create them. But the issue is that it's sometimes very hard to find what you want on Hugging Face, because it's crowdsourced. We love Hugging Face, and they do a phenomenal job of creating a platform for everybody to share resources, but we found that most people don't document their work with data cards or model cards, list what datasets their models were trained on, or say much about the provenance of their datasets.

We found that about 65% of the licenses on that platform were incorrect, because people upload other people's datasets and just make up a license, or copy a license meant for code rather than for data. So it's currently very chaotic, and there's no good way to do it. As a result, two things happen: (1) people don't find datasets that are very applicable to them, or don't use them because they're not sure about the license, and (2) they end up using datasets that aren't appropriate for them. So you see a lot of both happening.

Let's move on and talk about licenses and the other legal aspects of these datasets. Robert, to get us started, give us an overview of licenses and how they relate to copyright law, and maybe also give a couple of real-world examples of data licenses that listeners might have encountered in their everyday life.

Robert:

Totally. So copyright is this kind of bizarre thing in the United States because it arises the moment you create something. So when you create a dataset or a piece of writing or whatever, you have a copyright to that piece of work. And that expressive content is covered by your copyright. In general, copyright arises automatically, and it gives you this kind of exclusive right to make copies and to do certain other things with your work.

Now, the way you use a license, especially in the context of an open source community where you're putting your work out into the community, is to tell people, “You can use my work, and these are the conditions.” The open source movement pushed lots of interesting licensing eras. There was the idea of “copyleft,” where the idea was, “You can use this content however you like, but you have to license any follow-on content under the same terms.” That way you keep the community open. You make sure that people don't just take someone else's work, or the open source community's work, and make it closed source.

You might be familiar with CC BY or CC BY-SA. Those are Creative Commons licenses. There are a couple of others, like the MIT License and the Apache License, and you come across these on the web sometimes. These license agreements are often templates that people use, and they cover a wide range of things. We've actually seen some new licenses emerge around responsible usage of AI and things like that.

But in general, we looked at three key properties of these license agreements. First, we looked at what kind of usage they allow. Do they allow commercial usage of the licensed thing? Do they allow non-commercial usage? Or is it research only?

Then we looked at attribution. So do you have to attribute where you got the work from? And in many cases you don't and in some cases you do. And when you do, it kind of raises an interesting question about how you do attribution when you have potentially thousands of datasets that you're using.

And finally, there's the question of share-alike: does derivative work have to be shared under the same license, or at least a compatible license, as the works you're using as inputs? There, the key question is, “Is an AI model that you train on some data a derivative work for the purposes of copyright, or is it something different?”
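The three properties Robert lists (permitted use, attribution, and share-alike) map naturally onto a small record per license. The sketch below is hypothetical and simplified: the field names are illustrative rather than the paper's schema, the Creative Commons entries capture only the coarse properties of those licenses, and `custom-research-only` is an invented placeholder rather than a real license.

```python
from dataclasses import dataclass
from enum import IntEnum


class Use(IntEnum):
    """Permitted use, ordered from most permissive (0) to least permissive (2)."""
    COMMERCIAL = 0
    NON_COMMERCIAL = 1
    RESEARCH_ONLY = 2


@dataclass(frozen=True)
class License:
    name: str
    use: Use
    attribution: bool   # must users credit the source?
    share_alike: bool   # must derivatives carry the same (or a compatible) license?


LICENSES = [
    License("CC BY 4.0", Use.COMMERCIAL, attribution=True, share_alike=False),
    License("CC BY-SA 4.0", Use.COMMERCIAL, attribution=True, share_alike=True),
    License("CC BY-NC 4.0", Use.NON_COMMERCIAL, attribution=True, share_alike=False),
    # Hypothetical placeholder for a bespoke research-only license.
    License("custom-research-only", Use.RESEARCH_ONLY, attribution=False, share_alike=False),
]


def permits(lic: License, intended: Use) -> bool:
    """A license permits an intended use if it is at least as permissive as that use requires."""
    return lic.use <= intended


# Example query: licenses that allow commercial use without a share-alike obligation.
commercial_no_sa = [l.name for l in LICENSES if permits(l, Use.COMMERCIAL) and not l.share_alike]
print(commercial_no_sa)
```

Ordering the use categories as an `IntEnum` lets a single comparison answer "is this license permissive enough?", which is convenient when filtering many datasets at once.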

And we tried to tease this out in the paper we wrote, but we're at the limits of copyright in some ways. A lot of this law is undecided, and a lot of it is ambiguous. We're focused on the U.S. context, and things get even more complicated when you go beyond the U.S. So that was, I think, more than you asked for, but hopefully interesting to the listeners.

No, that was great, great context. So one question there. If I'm a researcher, what happens if I end up violating one of the licenses associated with these datasets?

Robert:

So, that's a good question and in many cases, the answer is that not a lot is going to happen. But essentially the creator of that work could sue you, right? Could say, “You've infringed on my copyright,” and there could be a lawsuit. And, we're seeing this happening right now, less so for fine-tuning datasets, but more so for pre-training datasets. People who've created art say, “Hey, these works were used without my permission or in violation of the kind of license agreement that I put on them to train Generative AI. And that's not okay.” So that's kind of the legal liability. And then there are secondary effects, right? Like, maybe people would be less willing to put their data on the internet and share it openly when they know that licenses aren't being respected and things like that.

And one of the interesting findings from the paper is just how complex these data licensing regimes can get. I'll just give a little bit of background here. So you all present a data taxonomy, and it works like this: There are data sources; multiple data sources create a dataset; multiple datasets roll up into a data collection.

To give one example from the paper: in the xP3x data collection, you might have multiple data sources, such as Amazon reviews, IMDb reviews, Yelp reviews, and so on. These roll up into the Sentiment dataset. Sentiment is one of several datasets in this data collection; there's also a Translation dataset and a Sentence Completion dataset. All of these datasets, in turn, roll up into the xP3x data collection. And at each stage (the data source, the dataset, and the data collection) licenses could be applied, sometimes conflicting ones.
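One way to reason about licenses applied at several stages is to walk the lineage from the collection down to each data source and keep the most restrictive use category encountered. This is a conservative simplification assumed for illustration, not legal advice and not necessarily how the paper resolves conflicts; the node names and license assignments below are hypothetical, not xP3x's actual licenses.

```python
from dataclasses import dataclass, field
from typing import Optional

# Use categories ordered from most to least permissive (an illustrative simplification).
RESTRICTIVENESS = {"commercial": 0, "non-commercial": 1, "research-only": 2}


@dataclass
class Node:
    """A data source, dataset, or data collection, with an optional license use category."""
    name: str
    use: Optional[str] = None
    children: list["Node"] = field(default_factory=list)


def effective_uses(node: Node, inherited: str = "commercial") -> dict[str, str]:
    """Map each leaf (data source) to the most restrictive use found on its path from the root."""
    current = inherited
    if node.use is not None and RESTRICTIVENESS[node.use] > RESTRICTIVENESS[current]:
        current = node.use
    if not node.children:
        return {node.name: current}
    result: dict[str, str] = {}
    for child in node.children:
        result.update(effective_uses(child, current))
    return result


# Hypothetical lineage: collection -> datasets -> data sources.
collection = Node("collection", use="commercial", children=[
    Node("dataset_x", use="non-commercial", children=[
        Node("source_a"),
        Node("source_b", use="research-only"),
    ]),
    Node("dataset_y", children=[Node("source_c")]),
])
print(effective_uses(collection))
```

In this made-up tree, the collection-level label says commercial use is fine, yet two of the three sources end up more restricted once the whole lineage is considered.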

So who has the responsibility for sorting this out? Talk a little about the complexity there and what you found. Is it the data aggregators who are responsible, when they create these data collections, for making sure all the licenses are sorted out before the data is used for fine-tuning? Or who has that responsibility?

Robert:

So one of the things we found that was unfortunate, and to some degree shocking, is that there's simply a lot of mismatch between the licenses reported by various aggregators and the licenses given by the original authors. In a number of cases, licenses are actually reported as less restrictive than what the original authors specified.

So, if aggregators have responsibilities, those responsibilities should probably be limited to veracity and truthfulness, and ideally to having clear provenance. What we're hoping to do is make that easier, because it's a lot of work. These aggregators fill an important role in the AI ecosystem by making these datasets available to researchers, startups, and all sorts of people, and I think it would be wrong to place the burden too heavily on them. But ideally, you would want accurate provenance information. We're doing a lot of thinking about how to ensure that provenance information is accurate, so that you almost have provenance of provenance, right? That this was the actual license the person used. And then you can trace that through the sources, the collections, and so on. I don't know, Shayne, if you have anything to add.

Shayne:

I'd add one thing to what Robert said, which is that we provide a tool to give symbolic attribution. If you use our tool, you select which datasets you want based on any licensing, language, task, topic, or other criteria. We then produce a CSV, a README table, or whatever format you want, with all the metadata for the datasets you selected, structured so that a human or a machine can go through it and look at the license links and so on.
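A filtered metadata export like the one Shayne describes could look roughly like this. The records, field names, and the `filter_and_export` helper are hypothetical and do not reproduce the Explorer's actual output format; the sketch only shows the idea of selecting datasets by license and emitting a CSV that a person or a script can audit, using Python's standard `csv` and `io` modules.

```python
import csv
import io

FIELDS = ["dataset", "license", "license_url", "languages"]

# Hypothetical metadata records; the fields are illustrative, not the Explorer's real schema.
records = [
    {"dataset": "dataset_x", "license": "CC BY 4.0",
     "license_url": "https://creativecommons.org/licenses/by/4.0/", "languages": "en"},
    {"dataset": "dataset_y", "license": "CC BY-NC 4.0",
     "license_url": "https://creativecommons.org/licenses/by-nc/4.0/", "languages": "en;es"},
]


def filter_and_export(rows: list[dict], allowed_licenses: set[str]) -> str:
    """Keep rows whose license is in the allowed set and render them as CSV text with a header."""
    selected = [r for r in rows if r["license"] in allowed_licenses]
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(selected)
    return buf.getvalue()


print(filter_and_export(records, {"CC BY 4.0"}))
```

Keeping the license URL in every row is what makes the export useful for attribution: whoever uses the combined data can follow each link back to the original terms.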

And this tool is the Data Provenance Explorer that we mentioned earlier. And it's basically a resource for researchers to be able to come and search for data sources, datasets, data collections, and see the full lineage and provenance so they can, number one, ensure they're using the data ethically and legally. And, I guess, number two, ensure they understand what's in the data so they can more effectively fine-tune their models. Is that the right summary? Is there anything you want to add there?

Shayne:

I'd add one thing to that. We believe this is actually the largest audit of text fine-tuning data, and the largest license audit, that's ever been done in AI. Lewis, one of our advisors, is a legal scholar and open source scholar, and he uses this when he's talking to lawyers and legal scholars about how these software licenses have been adopted in the AI landscape. So an ecosystem view, or supply chain view, of what's happening is useful to legal scholars as well.

It's also useful, in general, for people who want to understand how Western-centric AI data is, or who want to look at language or task distributions, or distributions of creators by country, or by academia versus industry. So we think it's also a useful tool for social scientists to understand the evolution of the field.

Yeah. I think the Western centricity of these datasets was an important finding from the paper. It reminds me that my first podcast ever was actually with Professor Kutoma Wakunuma, who co-edited a book on responsible AI in Africa.

And this was a theme of the book: there were not many datasets relevant to African researchers and African problems, such as datasets for agriculture, health care, or even languages. There are something like 1,500 to 2,000 languages in Africa, and many of them aren't covered. I know the paper is new and the research project is still ongoing. Is there anything you can say, or want to say, on the Western centricity piece?

Shayne:

Yeah. We don't have too much to say yet, other than that it's extremely Western-centric. It's very, very concentrated in the U.S. and Europe. The language distribution is very much English, with a bit of Spanish, French, German, and Chinese. Then there's a long tail: hundreds of languages are represented, but often by one or two specialty translation datasets in the community for rare languages. Swahili is the example people like to use of a lower-resource language.

And we love to show the heat map of where all the represented languages come from, and then contrast it with a heat map of the world showing where the creators of the datasets (the people who package this data) and their organizations are from. That one is way starker. There's very little to zero representation in the Global South, and it's incredibly U.S.-centric, with a little bit of China, Western Europe, and Canada.

And what countries are these datasets coming from, and what's the cultural representation?

Shayne:

I would say that the datasets are scraped often from Wikipedia, The New York Times, Reddit, social media, like all these different places on the web, you know, even exam websites and Quora, Answers.com, stuff like that. Those themselves are very Western centric in that they come from mainly Western countries, but they're in English and lots of countries speak English, including in Africa and elsewhere.

And so non-Western countries are kind of represented, but it's not really culturally there. You know, they're for the most part covering the Super Bowl and American centric things. But then the creators themselves are even more concentrated in the US and China and a couple of other countries.

Yeah. And does the fact that these fine-tuning datasets are so Western-centric have any implications for the end user experience? If I'm using one of these AI language tools, ChatGPT, Bing Chat, Bard, what have you, what might I experience as the end user that would be different if the fine-tuning datasets were more diverse? Or does pre-training matter more than fine-tuning in that respect?

Shayne:

The pre-training matters a little bit more in diversity, because that's where you lay the foundation of knowledge and understanding of different languages to a larger degree. But, they will both likely have a strong impact on cultural representation.

So we actually taught a class last year at MIT, an intro to using these language models, just after ChatGPT came out. One of our students was from Nigeria, and her experience with ChatGPT was markedly different from the other students'. She was asking general questions about her culture, her country, things like that, and she said it was constantly hallucinating fake information. It didn't just fail to know; it was absolutely off the wall. So she did not have nearly the same experience. If we want to replicate that experience for the rest of the world, not just in the U.S., but in other English-speaking countries and in countries outside the English-speaking world, there's so much work to be done. This technology hasn't hit its peak yet.

Let's close by talking a little bit about how the Data Provenance Explorer, the paper, and the research project overall have been received. What are your thoughts there, and what kind of feedback have you been getting from folks?

Robert:

I mean, from my perspective, it's been a little bit overwhelming, but very positive. This is the kind of work that I think a lot of people feel should be done, but it took a lot of resources to actually do, so people are grateful that it's been done. And we've gotten a lot of “yes, and”: people saying, “Oh, have you considered adding this and that?” And we always say, “Yeah, help us do it.” We'd love to.

We had some coverage in The Washington Post, which I think was important for communicating the more tangible aspects, especially given the discussion you and Shayne just had, like Western-centricity or other kinds of biases that provenance tracing can uncover. So it's been useful in that way.

And then from the legal community: this argument that fair use might not apply to all training data hasn't really bubbled up that much. Generally people say, well, “You're using stuff that wasn't intended to be used that way to train AI; that sounds like fair use.” And there are obviously limits to that. But not many people have pointed out, “Hey, actually, AI is trained on a lot of work that was created only to train AI, and that changes things.”

So from different communities, I think we've gotten good, positive reception. And, you know, for anyone listening who'd like to help out we're quite excited about growing this network of collaborators and diversifying the different kinds of information that we include and different things we investigate. So, we're very open in that way.

Shayne:

I'd add that we got tens of thousands of visits in the first week after we launched the Explorer tool. We know that a lot of startups are quietly using the tool to help them navigate their risk. One that has publicly acknowledged it is Stability AI, but there are others.

But a lot of people are also content at this stage to just download whatever's available on Hugging Face, because it only becomes a problem if you actually get big and someone wants to sue you or cares about your project because lots of people are using it. So some people figure they'll deal with lawsuits once they've actually made it.

Well, I applaud your effort and the effort of your collaborators, so I'm glad the reception has been so positive. I think it's a great project, and I'll put a link to the Data Provenance Explorer in the show notes. But for those who are interested, the website is dataprovenance.org. I encourage listeners, even if you're not researchers, to go and play around. I had a lot of fun just kind of searching for different datasets and looking at the lineage. Let's end by talking about the future goals of the project. Tell us what's next for the Initiative and what you're excited about.

Robert:

I can start with some of the legal analysis. We're only scratching the surface with fine-tuning datasets and this fair use analysis. It's all been very U.S.-centric, and we'd really like to branch out, especially in light of the EU AI Act; there are some interesting interactions there. There's always going to be a challenge in the fact that AI is, in some ways, a fundamentally global endeavor, but it's going to be deployed in specific jurisdictions. That raises interesting questions, so getting some insight into the legal implications around the world is important.

Then expanding to pre-training datasets. And the bigger vision, at least from my perspective, is that we want a regulatory framework that understands the actual process of AI development. One that understands the community, understands the research, and is based on a realistic understanding. And one that encourages responsible AI: the safe deployment of these tools and the inclusive design of these tools and systems. So for me, the Data Provenance Initiative has been an opportunity to bridge the gap between regulators and machine learning engineers. That's what I'm really excited about. Shayne, I'm sure you're excited about some stuff, too.

Shayne:

Robert put it superbly. But to add to that, practically, we're building out the collection to include thousands more datasets. Just in the two months since we released it, new datasets have become really popular, and more will keep appearing. So we're identifying those.

We are partnering with folks who've worked more on African language datasets, Arabic datasets, Southeast Asian datasets, and the Aya initiative from Cohere for AI, which is a huge, multilingual and highly diverse dataset collection initiative.

We're also likely to expand into speech and visual modalities, looking at pre-training data, and then continue on to a wider scale audit and analysis of that ecosystem.

In general, one thing I want to add on the legal, licensing, and regulatory front is that people right now often think about data as a binary decision: it's either usable or it isn't. Either creators have opted in or they've opted out. Either the license is commercial or it's non-commercial. But really, across jurisdictions and across all the rules, applications, and different ways you can use this data, there's a spectrum of indicators that dictate how a dataset should and shouldn't be used. So we don't want to advocate for one policy or one binary decision. We just want to create a structured landscape so people can apply their decisions however the policy, the legislation, the law, and the ethical norms evolve. That's what we're pushing to do.

Robert Mahari and Shayne Longpre, thanks for being on the podcast.

Robert:

Awesome. Thanks so much, James.

Shayne:

Thank you.