AI-inflicted harms: Can insurance fill the gaps?

A conversation with Professor Anat Lior from Drexel University’s Kline School of Law

If you follow AI, you’ve probably heard about the growing volume of proposed AI legislation in the U.S. and beyond, as well as the increasing number of AI-related cases being brought before the courts. Today’s guest argues there is another industry that is key to handling AI-inflicted harms. Everyone’s favorite: the insurance industry!

Anat Lior is a professor at Drexel University’s Kline School of Law and has written broadly about the intersection of insurance and emerging technologies. In our conversation today, we’ll focus largely on her paper in the Harvard Journal of Law and Technology, “Insuring AI: The Role of Insurance in Artificial Intelligence Regulation.”

We discuss insurance’s role in society and its intersection with emerging technology, how insurance can supplement the courts and government regulation, and end with a discussion of specific insurance proposals related to autonomous vehicles. I thought it was a fascinating conversation, and I think you’ll enjoy it.

This transcript has been edited for clarity.

Professor Anat Lior, welcome to the podcast. Thanks for being here.

Hi. Thank you so much for having me. It's a pleasure to be here.

When most people think about insurance companies, they think of them as either boring or evil. So help get us excited about insurance and the function it plays in society.

Yes, thank you. That's a great intro for me, talking about insurance. Usually when I talk to my students or in front of an audience, I ask them to give me the benefit of the doubt and not run away just because I said the word “insurance.” I know a lot of us likely have very negative associations with insurance, specifically health insurance and the way we dislike how it works.

That is the broken American system, I would say, as someone who's a foreigner. But there are a lot of opportunities, a lot of benefits, a lot of good things that insurance companies have the potential to do. They don't always do it, but they have the inherent potential in that industry to actually benefit us as consumers, as a society.

If there is a technology we are worried about but still want to implement safely, assimilating it into our lives in a way that balances safety mechanisms against the risks involved, that's what insurance has been doing for years. We've seen this since the Industrial Revolution, with the automobile, hot air balloons, and airplanes.

I mean, the first people who went up in a plane were probably crazy and could not get insurance coverage. But as the technology matures (and I think we will see the same with AI), insurance moves deeper into the thick of it and offers policies and coverage that enable us to use the technology, knowing that there are always risks, but at least we are covered if something bad happens.

Liability insurance, which we all know from cars (and which I assume we'll talk about a lot), has the ability to nudge our behavior in a safer direction. We can feel it as we drive our cars. More technologies are using it right now, and I think it can do the same for a safer implementation of AI as it becomes an integral part of our commercial lives.

Do you have a favorite example of insurance helping to usher in a new technology?

I do, steam boilers, which is kind of a boring example, but stay with me.

So steam boilers exploded a lot early in the Industrial Revolution, and a lot of people died as a result in the UK and the US, so specific insurance companies were created to solve those problems. Engineers got into the picture and came up with ways to make steam boilers a lot safer. So, in that sense, we have what I call an alignment of interests.

Insurance companies want to make sure that we don't hurt ourselves, because if we do, they pay us money: they reimburse us, they pay compensation, and they don't want to pay a lot of money. And we buy the insurance coverage to protect ourselves, whether because it's mandatory or just common sense. Most people are risk averse.

So when those interests align, insurance companies have a lot of incentive to make sure we know how to act safely. And they bring in other partners, such as engineers in the case of steam boilers, to make things safer than they were before. We can also see this with fire insurance: sprinkler systems and alarm systems, things that did not exist before.

If you need insurance, they will say, “Yes, I will provide you with coverage, I will give you a policy, but you need to do these things to be safer, to make sure the activity itself is not as risky.” Then the premium goes down, insurance companies make more money, and we get injured less.

Legend has it that the same happened with the automobile industry, when insurance companies pushed for regulation mandating seatbelts and airbags, which were not obligatory before. As I said, they have the incentive to make sure that even if an accident happens, the safety mechanisms in place mitigate the damage so that they pay less. I say “legend has it” because a lot of books and scholarly works claim insurance companies pushed for seatbelt and airbag regulation, but recently a lot of people have started to push back against that notion.

So I think these examples are ways that insurance companies can help us. They just need the right incentives to do it.

And real quick, steam boilers were used for what?

Heating and electricity at the beginning of the Industrial Revolution, then trains when they emerged, and everything connected to factories and big machinery.

You talk a lot in your paper about how government regulation and the law and insurance all kind of work together and complement each other. And I think it's maybe not something that we typically think about. We might think, you know, “Hey, there's an accident, I'll sue someone” or “There's an accident, the government should put in some kind of new regulation.”

So what's wrong with just using courts and tort law and government regulation to handle new technologies? How does insurance support those two systems?

That's a great question, because in a later article I talk about the innovation cycle among insurance companies, new technologies, torts, and the government. The government is always in the background; there's no way to take it out of the equation.

So when we talk about new innovation (I mentioned a couple of examples, but right now it's AI, and I think in the future quantum will be the next big thing), the people who make the legislation, the laws and acts, have no expertise in the subject matter. We can see it in Senate hearings about Facebook in the past and AI in the present. They don't understand the technology.

I think most people don't understand the technology. So creating regulation to govern a technology we don't completely understand will probably be meaningless, or counterproductive in a lot of ways. We see this with other technologies as well: by the time the legislation is out there, it's already irrelevant.

We can see this with generative AI. Until ChatGPT happened, AI was mostly thought of in terms of drones and robots. Then large language models happened, and the regulation everyone had been talking about was irrelevant because it never anticipated language models. And we don't really know in what other directions AI will evolve.

So creating strict regulation in that sense might be problematic. We see the EU doing this, and we see China and Canada doing this. I'm not saying it's the wrong approach.

I'm just saying that the government sometimes lacks the expertise, in a way that can produce a counterproductive law, especially when we're worried about stifling innovation, which is a very American worry in the tech race against China and other countries. So a lot of people are afraid of creating specific regulation. The tort system and the court system are very important.

I teach torts. I think it's a very strong, very well-founded system that we obviously all need. But when it comes to new technologies, and there are a couple of articles discussing this, even judges have no idea what they're looking at or what they're doing, and they can create precedents that later turn out to be irrelevant.

And courts don't have the flexibility that insurance companies have to change course fast. First there has to be an accident. Then someone has to have the means, the capability, the strength, and the power to actually go to the court system, which can take a lot of time.

And I haven't yet seen cases of AI-inflicted harm make their way through the court system, which I think is because there are big companies behind these harms and they usually just settle out of court. So getting an actual rule from the court system should, and probably will, take a lot of time, because someone really has to have an incentive to go through the whole system and come out the other side with an actual ruling.

And even if that rule is Rule A, and AI later changes course completely and we want to shift to Rule B, it can take another couple of years for the court system to revisit it. The insurance industry, in that sense, works with contracts that are renewed yearly. So it has the flexibility to react fairly quickly if something happens, and we can see that AI can shift course rather quickly.

And that rapid change is the fear everyone is thinking about when they talk about AI risk, and insurance companies can implement changes much faster than the court system can. The problem is, as I said, that insurance is portrayed as the bad guy because it has the loopholes to be the bad guy. It can deny claims; it can just say, “I'm taking your premiums, and I have all these exclusions.” And there are caps, copays, and limitations, which we all know and hate from health insurance, and a little bit from auto insurance as well.

So putting insurance companies in charge of everything would be wrong, but making sure they have some role to play in filling the regulatory void is important. Legislators simply don't know what they want to do, and even when they do know, the end result might be very problematic.

So having all three systems cooperate (courts, regulators, and insurance companies) will, I think, be the best course of action, especially with the regulatory system making sure that the insurance industry has some sort of framework to use. Again, consider the steam boiler, if I go back to this seemingly boring example: there was regulation that created a framework saying that insurance companies could create specific products to cover steam boilers. We can also see this with cyber insurance. The New York framework legislation is creating an infrastructure to make sure companies have the ability and the incentive to offer these types of policies.

We also saw this with terrorism insurance right after 9/11, when no one wanted to offer those policies anymore because insurers had paid out a lot of money after 9/11 and there was a lot of unpredictability. I talk a little bit about this in the article: the idea of known unknowns. Eventually the insurance industry and the government came into play and created the Terrorism Risk Insurance Act (TRIA), which gave insurance companies an incentive to offer policies again. So the government has a very strong part in making sure that insurance companies can do this and do it right.

But when it comes to actually legislating something substantive, I'm not sure we want them to do it yet.

Yeah, to double click into what you were just saying and kind of add some structure.

In your article, you list several advantages that a system involving insurance can have over a purely tort-driven system. So I'll tell you the notes I have, and you can add anything. The first advantage you call out is that insurance companies can operate ex ante.

Unlike courts, which have to wait for a case to be brought before they can rule, insurance companies can offer a policy basically anytime they want. Is there anything you want to add about that advantage?

I would say that's a double-edged sword, because sometimes there's something they are so afraid of that they will not offer anything, although history shows us that eventually they do.

We are seeing COVID- and pandemic-related policies, and coverage for floods, terrorism, protests, and police-related incidents. So insurance companies will offer unique products based on what is happening in the world. Usually, in the beginning, the premiums will be insane, there will be a lot of exclusions, and the caps will be very low.

So for the first people who pay for coverage, it probably will not be worth it. But as more information is gathered and insurance companies better understand the predictability and scope of the potential risks, they get better. And insurance companies react as things are happening; unlike the court system, they don't need anyone to come to them before they can react. So technically they are positioned to get all the information they need, because they are monitoring risk, and AI is connected with everything right now.

So if there's a medical malpractice case with AI involved, or, as we're seeing a lot, lawyers submitting embarrassing filings they used AI for, those professionals have malpractice liability policies in place. So insurance companies are already seeing all the effects of AI through their traditional policies, and they gather information. They know what's happening, and eventually they are better able to cover it in a way that benefits both sides.

And the next item I have here in my notes is insurance is better able to handle atypical claims early because unlike courts, insurance companies are not bound by judicial precedent.

Yeah. So they have the ability to look at something and say that they made a mistake in the previous case and just completely shift their direction. I mean, there is an issue obviously with certainty, and we as a system, even if it's not the court system but the insurance system, we want to make sure that policyholders know what they're going to be liable for or obligated for.

But even if insurance companies have to wait until the next term to change the policy, it will be faster than what the court system can do.

Another thing you point out is that insurance companies just have a lot of data. This is something I think people inherently understand and think about.

Insurance companies have a lot of private data. They know how many people they're insuring. They know how many of those people are getting into accidents, what kinds of accidents, how long it takes someone to get into an accident, etc. Which I think is not generally public information, so they're able to leverage that data in really beneficial ways — again, some people would say maybe exploitative ways, but we'll stick with beneficial ways — for society and for technology.

I think insurance companies have a very important role in that context. There are some worries to consider, though. A lot of people, when they talk about AI and insurance, talk about the flip side of our conversation right now: how AI can help insurance companies with underwriting, detecting fraud, gathering information faster, and so on. AI can sift through information and give you specific models to calculate risk. The whole point of AI is making predictions and recommendations about what's going to happen, and insurance companies can and do use AI as a tool to make their pipeline more efficient, allegedly.

Obviously AI has a lot of problems with bias, discrimination, privacy, and so on, and that is problematic. We need to consider those things when we see insurance companies using data.

I will say that insurance companies were kind of discriminatory even before AI. We know from data that 21-year-old male drivers get into more accidents than female drivers, and they usually pay higher premiums because of that. A lot of people will call this discrimination, but this is just how the model works.

And if we want to spread the risk across a big enough pool, which is the essence of insurance (a big enough pool absorbs the cost for the specific person who needs a lot of money in a given scenario), and we think this model works, then these kinds of differences are just part of the system.
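The pooling idea in this answer can be made concrete with a minimal sketch. It assumes, purely for illustration, that every member of the pool faces the same independent risk and that total losses are split equally; real pools are neither identical nor independent, and the 1% chance of a $50,000 loss is a hypothetical figure. Each member's expected share stays the same as the pool grows, but the uncertainty in that share shrinks, which is what makes a fixed premium workable.

```python
import math


def pooled_cost(p: float, loss: float, n: int) -> tuple[float, float]:
    """Mean and standard deviation of each member's share when n independent
    members each face the same loss with probability p and split total losses
    equally."""
    mean = p * loss                          # expected share does not depend on n
    std = loss * math.sqrt(p * (1 - p) / n)  # spread shrinks like 1/sqrt(n)
    return mean, std


if __name__ == "__main__":
    # Hypothetical risk: 1% chance of a $50,000 loss per member.
    for n in (1, 100, 10_000):
        mean, std = pooled_cost(0.01, 50_000, n)
        print(f"pool of {n:>6}: expected share ${mean:,.0f}, std dev ${std:,.0f}")
```

Going from one member to 100 cuts the standard deviation of each member's share by a factor of ten while the expected share stays at $500, which is the sense in which a big enough pool absorbs the cost of one member's large loss.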

I always give my students the example of women getting a haircut: they usually pay more. That's something we don't consider discrimination. It's just features that are taken into consideration and that might make some sense. When it comes to the insurance industry, it's kind of annoying, and I completely understand that. But the models work, and the models are what allow insurers to spread the risk in a way we benefit from. If there's some percentage chance that I will suffer an accident and lose a lot of money, and I can pay a specific sum each month as a premium so that someone will help me when that happens, most of us will do it.

I know insurance is mandatory for cars, but think about health insurance when you travel abroad. Think about insurance for scuba diving, skydiving, or skiing. You need insurance in place when you do those activities, because something bad might happen. Even pet insurance: people buy it even though it's not mandatory. That's our risk-averse nature. We want to pay small sums of money now to make sure that if something really bad happens down the road, someone will be there to support us. Hopefully not in a bad, manipulative way that just declines all our claims, but in a way that actually makes society better.

A lot of people compare having insurance to vaccination. The more people who are vaccinated, the safer we are as a society against disease. Likewise, the more people who have insurance, the better protected we are: we have a risk-hedging mechanism, so that when bad things happen, the cost doesn't fall back on the government and the taxpayers, who may not have enough money to help us. Instead it falls on the insurance and reinsurance systems, mechanisms that have enough money because they collect our premiums to help when bad things happen.

And that's how they market themselves as well. So hopefully it's not a lie.

The fourth advantage you talk about is that tort law usually requires proving a causal chain, which can be very difficult even in fairly typical court cases, and the difficulty can be exacerbated with something like AI that has a black-box model. Insurance is able to circumvent some of the difficulties that legal causation requirements create and help protect people without raising philosophical and other legal questions.

So proximate causation and actual causation are extremely important. We all know that. When we talk about negligence more generally, to establish a cause of action in negligence, and even in strict liability, we need a causal connection, a causal link, between the conduct and the damage that occurred in the end. Because of the black box in the middle, meaning we don't really understand the AI's decision-making process or the proxies it used to make the decisions that led to the accident, it's really hard to say, yes, “but for” this conduct the harm would not have happened, or yes, this was foreseeable.

So foreseeability is a very big thing in tort law. The insurance industry offers a bypass. As you said, it doesn't solve the philosophical question of whether there was a causal link. But it puts compensation, making sure that people are compensated for their loss, front and center, regardless of whether they can point to a specific entity to blame.

We as humans have a primal urge to make sure that someone is held accountable. But with AI, it's going to be extremely difficult, at least in the beginning, to find a human entity behind the AI that can be held liable, because a lot of companies will say, “I did not expect this; this was not foreseeable. I gave the machine a very specific program or prompt, and in the end it reached its own conclusion.” That's an argument a lot of companies are making right now, and it puts tort law under a lot of stress.

When it comes to insurance, we usually do want a causal connection, a causal link, to find some sort of liability. But if policyholders with a policy are hurt or damaged, and there was no malice or fraud, they should be paid compensation according to the policy. In that way, as I mentioned, we bypass the philosophical issue that tort scholars are fighting about: whether negligence or strict liability applies. Both obviously require some sort of proximate causation.

So we're not really bypassing this either way, and we'll see what the court system will have to say about this when it actually gets there. But right now, if we want to make sure people are compensated while the technology is out there, and we think this technology is important enough to put it out there, even though people are suffering, we need to have some sort of a compensation mechanism. And insurance companies, I think they're just very well suited to actually do it because the infrastructure is already there.

How does the black-box nature of AI affect insurance companies, if at all?

It does affect them, because insurance companies need to know the predictable scope of a damage and the probability of an event happening. Probability times scope gives them the basis for a premium they can charge us. So if we have neither the probability nor the scope, because it's a black box, we don't really know what types of damage it's going to lead to or how large those damages will be.

A lot of people talk about AI as an existential threat. It's going to destroy the planet, or at least parts of it. So when it comes to this scenario, the insurance industry has a challenge in creating some sort of a policy that actually allows them to offer coverage and not lose all their money as a result of a lot of policies being triggered at once or something that they did not expect, and then they will have to pay enormous sums.

Usually that's not a real issue, because most policies have some sort of cap, so insurers will pay up to a certain amount and that will be sufficient. But they lack the ability to accurately calculate a premium that ensures they stay in the game and don't go bankrupt as a result. So the insurance industry also has a bit of fear of the black box. But because they have an incentive to make money, they will probably just charge higher premiums.
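The pricing logic described in this answer (probability times scope, bounded by a policy cap, with higher premiums when the risk is opaque) can be sketched in a few lines. This is a minimal illustration, not how any particular insurer prices: the 30% loading, the dollar figures, and the rule of pricing off the pessimistic end of a probability range as a stand-in for black-box uncertainty are all hypothetical assumptions.

```python
from typing import Optional


def expected_payout(probability: float, severity: float,
                    cap: Optional[float] = None) -> float:
    """Probability of the event times the loss, with the loss limited by an
    optional policy cap."""
    payout = severity if cap is None else min(severity, cap)
    return probability * payout


def premium(probability: float, severity: float,
            cap: Optional[float] = None, loading: float = 0.3) -> float:
    """Expected payout plus a loading for expenses, profit, and uncertainty.
    The 30% default loading is a hypothetical figure."""
    return expected_payout(probability, severity, cap) * (1 + loading)


def black_box_premium(prob_low: float, prob_high: float, severity: float,
                      cap: Optional[float] = None, loading: float = 0.3) -> float:
    """Assumed cautious rule: when the probability is only known to lie in a
    range, price off the pessimistic (higher) end of that range."""
    return premium(max(prob_low, prob_high), severity, cap, loading)


if __name__ == "__main__":
    # Hypothetical AI-related risk: a 2% chance of a $1,000,000 loss.
    print(round(premium(0.02, 1_000_000)))               # no cap
    print(round(premium(0.02, 1_000_000, cap=250_000)))  # capped policy
    # Same capped policy, but the probability is only known to be 1% to 5%.
    print(round(black_box_premium(0.01, 0.05, 1_000_000, cap=250_000)))
```

The cap bounds what the insurer can lose on a single policy, while pricing off the upper end of the probability range is one simple way to express the higher premiums an insurer might charge when it cannot see inside the model; real actuarial practice is considerably more involved.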

And as I said, the more information they have, and the more models they can develop to accurately predict the scope and probability of a given AI-related harm, the better they'll be and the better policies we'll have. We saw this cycle a little bit with cyber insurance. Cyber insurance is not a good tool at the moment.

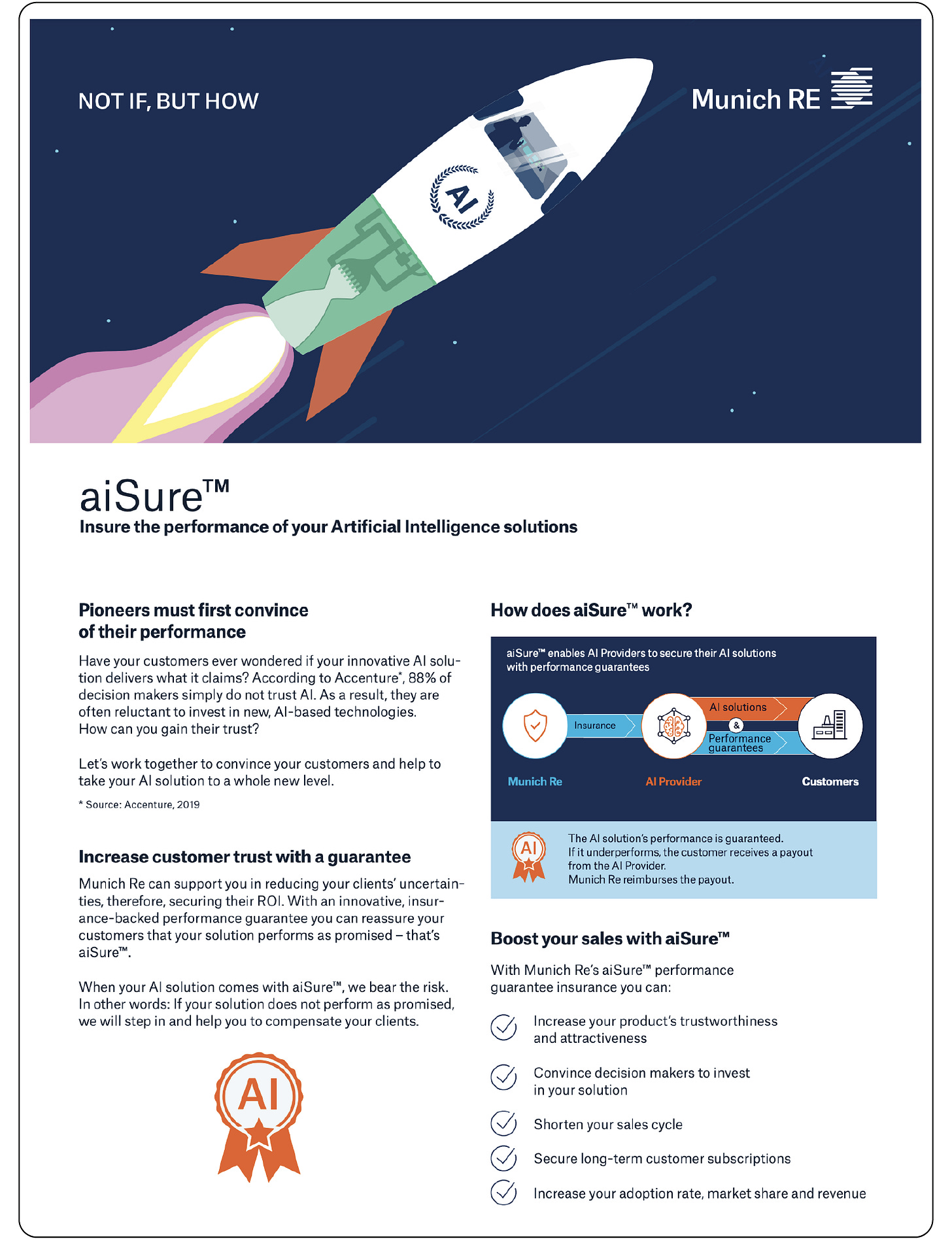

It has a lot of problems, a lot of defects. The first insurers who offered it did not predict ransomware attacks, which are the big thing right now, and they eventually went bankrupt because they charged low premiums and didn't have any specific exclusions. So that happened. I don't think it will be the same with AI. And I don't think we will have specific AI insurance products, even though companies like Munich Re are working on this.

But the bottom line is that they do have an incentive. The first policies will just be very, very strict until insurers know enough to give us policies that are actually worth our time.

You mentioned some cyber insurance companies went out of business. How are the insurance companies still offering cyber insurance responding to ransomware? Are they just raising their premiums, or…?

That's a great question. I don't know enough about this industry to give you a detailed answer, but my intuition is that they are excluding some kinds of ransomware and creating caps to make sure that even if they do offer coverage, they won't go bankrupt. I assume they do offer ransomware coverage, because cyber insurance without ransomware protection is kind of meaningless; that's the riskiest thing. But they put a lot of exclusions into it.

I wanted to shift and talk specifically about autonomous vehicles for a little bit. This is something you discuss in your paper as well. Before we dive in, can you give us an overview of first-party and third-party insurance? I think most people with automobile insurance today are most familiar with first-party insurance: I'm a driver, and I buy insurance to protect myself if someone hits me.

But there are other types of insurance that might make more sense, or at least that people have proposed for autonomous vehicles that involve other people or other entities taking on insurance. So talk a little about that.

Sure. So, as you said, the automobile is the most intuitive example of first party insurance. So if I drive and something happens to me, the first party policy will cover any related damage to myself or my property.

The second party is the insurance company. That's why we don't talk about second-party insurance: the insurer is always the second party, and no one really talks about it.

The third party policy talks about me causing damages to anyone else but myself or my property. So if we're talking about driving a car, hitting a pedestrian, hitting a bus, hitting another car, the type of policy that will protect me from having to pay to that third party is the third-party policy.

If we zoom out a little from the traditional policies we know today, the rationale for us as drivers being the ones who buy the policies is that we are driving the car. We have the ability to control the risk, allegedly. Some things we cannot control, like the infrastructure: a bump in the road, a street light that isn't working, and so on. But most of the advice (don't drink and drive, don't drive while sleepy, don't speed) concerns things that we as drivers can control. And insurance companies have the ability to nudge us to follow it, because, as most of us know, if we get into an accident and file a claim, triggering the policy, we'll probably pay more afterward: the premiums go up and the coverage changes.

And that's one of the mechanisms that insurance companies have in place to prevent us from doing whatever we want. So this connects us to one of the negatives, which maybe we'll talk about later, but I think it's connected to what I'm talking about right now, moral hazard.

Moral hazard is something very much embedded in insurance. It's the idea that I have someone protecting me, I have a backup. Because I know someone will eventually pick up the check, I have no skin in the game. I don't care; I can do whatever I want. It's sort of like a toddler who does whatever they want because they know their parents will take the blame and no one can hold them accountable.

And insurance companies were really afraid of this until the 18th century. Third-party policies were against public policy. They were illegal, because technically we had no underlying interest in the third party, and so no incentive to protect them. We could take out that insurance policy and just defraud people. With life insurance, for example, there have been a lot of horrible examples of strangers taking out life insurance on a third party. The strangers have nothing to do with this individual, but they put a policy in place and then kill the individual, or make sure they die, in order to collect the payout.

If I don't have an underlying interest, a third-party policy can be against public policy and can be very dangerous. That shifted over time, as insurance companies developed mechanisms to make sure that we as policyholders have skin in the game. And we see that right now with driving. We put on our seat belts, we make sure we're driving a car that has airbags, we install anti-theft devices, because if we don't, there's a good chance the loss is excluded from the policy. Insurance companies use all types of mechanisms like these to nudge us, as policyholders and drivers, to be better.

But once I am not driving the car, once there's not even a driver's seat or a steering wheel, how can we expect the driver, the policyholder, the owner of the car, to be nudged by the policy if they have no control over the safety mechanisms or the way the car drives? In that sense, the discussion is shifting from obligating us as drivers to buy policies to saying, look, the manufacturers, the people creating the autonomous vehicles, are now better able to make sure the car we're using is safe. They control the software, and they can update it remotely: they just press a button and everything in my system is updated.

So there's talk of a shift toward saying that these big companies, Uber, Google, LG, whoever is creating cars right now, should purchase third-party policies, and that this should be mandatory whenever they want to put their cars on the road, whether by selling them to us or by operating them as a fleet and sending them to us on request, just like Uber does right now. That shift is ongoing in the context of autonomous vehicles: the companies should now be the entities responsible for purchasing coverage because they are at the best pressure point. They are in the best position to make the technology safer. As for us, the users who are drivers today, if we imagine a future where we have no ability to control the car and we're just sitting in it, there's nothing we can do.

So the mechanisms for preventing moral hazard that are built into our current policies are just gone. And that can be scary if we stick to the traditional model we have right now.

Yeah, you talk a lot in your paper about risk and risk reduction: the idea that if I have first-party insurance as a driver, I'm in the best position to lower my premiums with the insurance company, because I can reduce my risk by driving more safely, not speeding, yada, yada, yada. I think with some insurance companies you can even install a monitoring device in your car so the insurance company has that data and can verify that you're a safe driver.

But once I'm not driving, there's no point in my having an insurance policy, because I'm not in a position to reduce the risk of the car. The car is driving itself. As you were saying, I'm just a passenger. So, I guess, someone else has to take on the risk, someone who is in a better position to take it on and reduce it.

And in that case, it becomes someone like the autonomous vehicle manufacturer because they can put in all kinds of mechanisms and technology and monitoring systems to reduce the risk, and then they themselves will get lower premiums from the insurance company that is insuring them.

Yeah. And the example you gave is a good one: there are a lot of mechanisms and devices you can currently put in your car that monitor whether you're awake or asleep and how fast you're going, and that affects your premium. Insurance companies are trying to sell them to you right now as a way to make sure you're proactively mitigating, or lowering in some way, the risk associated with your driving. Just like health insurers trying to sell you a gym membership. Other people say this is undignified and a privacy problem. From a cost-benefit perspective, it can prevent a lot of costs and damages, but being constantly monitored is a cost of its own, a negative externality that we're not really talking about.

That's somewhat within the scope of our conversation today, but also not really.

It's profit-maximizing from the insurance company's point of view if they can have you take certain actions and verify that you've taken them, because then they know you're a lower risk, and it gives them more data to calculate the next model.

Yeah.

Let's talk about some of the specific proposals for autonomous vehicles. You outline six of them in your paper. We can go through them quickly, and if you have anything to add or any special call-outs, we can do that. The first one is called the Turing Registry. I think it's named after the computer scientist Alan Turing. It's a pretty interesting proposal.

Is there anything you want to call out in particular? Tell us a little bit about that registry.

So this is a registry proposed in 1996, long before AI exploded. The proposal is simply to make sure that every time an AI goes out into the world to be used by humans, it is registered, and the registration gives it some sort of effective protection.

In that way, it's a little bit like an FDA for algorithms: you examine the algorithm before it's used, and then you can put it on the market after it's been approved. I think there are a couple of issues with this.

The main issue is just defining “AI.” That's a broader challenge, not only related to the Turing Registry. I think most people think that everything is AI at the moment, even if it's just automation or something that makes simple calculations, and that can put a lot of stress on the administrative agency that would need to be created to run this registry. Again, I have no idea how it would be created, where the money would come from, or who has the expertise to sit down, go over the algorithms, and make sure they're safe.

We would actually need people who understand the algorithms, and that can also be challenging given the black box problem we talked about. And the technology, and the safety mechanisms that can be implemented, are changing so fast that even if you give approval, it can be meaningless within a month, and then the algorithm needs to come back for reapproval in order to be considered safe again.

So I think this is fascinating, because it was proposed long before people actually thought about AI causing a lot of damage. But I don't know if logistically it can happen, especially because there is someone who needs to go over the algorithm itself and say, “Yes, this is safe.” I have no idea if there is anyone out there who has the ability to actually be that confident in saying that.

Another issue you raise is whether an insurance company would be willing to participate in this program. Because if there's a single general registry of everything that's “AI,” as you were saying, that could include, I mean, so many products. We have smart toasters and things now that someone might classify as AI. And so the list of products is close to infinite.

And insurance companies would be, I guess, “happier,” we could say, if instead of a single registry, there were maybe multiple registries for different categories of AI, because that would allow insurance companies to use their expertise and their data about narrow product categories to make better decisions about premiums and things like that.

Yeah. To spread the risk more efficiently. I mean, imagine all insurance policies for everyone were in the same bucket; the risk spreading would suffer a lot. You need to create narrower pools, making sure each one has high risk and low risk together, to prevent this. If you put everyone together, the mechanism will not work. The same applies to AI.

The next idea, the second one you talk about, is in-house insurance, which I think you mentioned is how Tesla insures its cars today. I did not know that. Talk a little bit about how that insurance scheme works.

So this is kind of popular, and it makes sense, because people are afraid of autonomous vehicles. A recent Stanford University research study showed that only about 27% of people actually feel comfortable riding in an autonomous vehicle right now. I'm not sure if there's something creepy about it or people are actually scared of not having a human driver, even though human drivers are also extremely scary. We know that 94% of accidents are caused by human error. So being afraid of autonomous vehicles is a very intuitive thing, not necessarily based on statistics.

So big companies who want to push their products into the market, and who know that people are afraid of malfunctions, will say, “Don't worry, I know insurance companies are not covering this yet, but if something bad happens, I will cover everything.” And that's like an in-house policy that is sold to you with the car itself.

And so Tesla does this today. Like, if I have a Tesla and I get into an accident, Tesla will cover the costs?

That was true in 2018, when I looked at it, and I think it's still true; I haven't seen anything that changed that. And we see Teslas getting into a lot of accidents, so I think that's still the case.

I do know that Microsoft and OpenAI have something similar. They’ve said that if companies use their generative AI models and end up facing copyright claims, they will pay for those claims.

They know that it's going to be very hard to prove copyright infringement, and we're seeing this right now with the cases that are being dismissed by the courts. But yes, that's interesting. Again, this is a means of saying “we are so good, and even if we're bad, we got you.” So it's a good marketing scheme.

As you talked about earlier, if you read the terms of service, there are obviously exclusions to that. But it's an interesting idea.

The third set of proposals you talk about are a couple of laws. One is in the UK and one is in Germany. The UK law is called the Automated and Electric Vehicles Act. And the German one is called the Road Traffic Act and Compulsory Insurance Act (Act on Autonomous Driving). These are both compulsory insurance schemes for autonomous vehicles, is that right?

Yes. And the UK just kind of adopted the traditional model of letting the driver continue to be the policyholder. And it got a lot of pushback, with people saying that insurance companies need the foothold of the automobile industry because that's how they bundle everything up. We see this with insurance companies. You usually have to get automobile insurance, so you'll use that company to get pet insurance, health insurance, travel insurance, home insurance, and that's the way they get you. That's the way they get into your house. And if the requirement to get automobile insurance were no longer in place, they would lose a lot of money.

So that was a major pushback against this act, saying it just feeds into the insurance industry and its power, which I think makes sense, because we said that if we have no control over the car anymore, then making us responsible for purchasing a policy makes no sense from a nudging, incentivizing perspective. And that's a big part of the insurance industry and how it can help implement this technology in a safer manner. People did not like those acts.

Interesting. Okay. The fourth proposal you talk about is Manufacturer Enterprise Responsibility, abbreviated MER.

So this proposal was created by two scholars, Abraham and Rabin, and they suggested that once 25% of all registered vehicles are autonomous, the auto manufacturers will become responsible for all injuries arising out of the operation of these vehicles. They're saying that this will replace the tort system and will focus on bodily injuries, not property damage. And that will be the only option. Drivers who get injured will have to go through this MER scheme, which focuses on the manufacturers.

So this is kind of what we were talking about earlier, instead of the shift being voluntary, it's requiring a shift from driver liability to manufacturer liability for autonomous vehicles. Is that right?

Yeah. In terms of the responsibility for buying the policy, yes.

It's very meticulous, very specific, which I think is really admirable. I don't like the exclusion of property damage, because everything comes with property damage, and that's a lot. And making it a standalone solution, without the tort system as a supplementary mechanism, may be too extreme.

But it all depends on the volume of claims. I mean, the introduction of technologies like trains and subways, for example, caused a lot of damage in urbanized areas, and the number of suits in the court system just doubled or tripled, which put a lot of stress on the courts. If that's what they predict, and we want to make sure the court system does not collapse, that's okay. But I don't think that will be the case.

I mean, I don't think autonomous vehicles will lead to so many more suits that the courts and the tort system can't handle them. I think the tort system should be available for gross negligence and stuff like that, and for other cases where we want to make sure the manufacturers have an incentive to fix things. Because if we say that the manufacturers will pay a portion of their income or something like that, they don't have an incentive to make their technology better, because they're already paying.

So either way, money comes out of their pockets. So the incentive program and structure here can be problematic.

There's another entity that's also brought into the conversation with this proposal, as I understand it, which is the idea that autonomous vehicles might not be privately owned, but rather owned by a company like Uber, let's say.

So Uber would buy a fleet of autonomous vehicles from a manufacturer, and then you wouldn't own a car. If you needed to drive, you would summon a car. It would autonomously drive to you and take you to where you need to go. And so maybe it's Uber or the fleet owner that should have the insurance policy rather than the driver or the manufacturer.

That's a very important point, because the ownership structure of these AVs in the future will dictate the way the insurance should be structured. Well, not necessarily dictate, but it will shift the way the traditional policies are currently created. Because if it's a fleet situation and we don't even own a car, we just use one when we need it, which makes sense given urbanization and the lack of parking, then the shift to manufacturers providing a service makes a lot of sense.

If we own the car, maybe we should bear some responsibility in that context. And people will say different things. But again, if we own the car but have no ability to take proactive measures as we drive it, maybe we can take other measures as we store it or take care of it, and then we can have some skin in the game and share part of the responsibility.

But otherwise, there should be some sort of a balance that the traditional system does not necessarily offer. I mean, that requires making tweaks in it, not necessarily completely changing it.

And the fifth and sixth proposals are both basically national insurance funds or a European-wide insurance fund, which I guess would be supported by taxes on either drivers or vehicle manufacturers. And then if there's some kind of accident, maybe this would kick in: if it's catastrophic, funds would be paid out of these national funds.

Is that right?

Yes. I mean, the European fund was a proposal that never happened. There was one section that proposed AI having personhood, and that took all the focus. Section 59 was about insurance, and no one talked about it.

And so I give the specific subsections in the article itself, and they give a couple of options of what we can do with insurance and how we can utilize insurance. So if we have a national insurance fund, we will take some sort of a percentage from the users as well as the manufacturers. Usually the manufacturers will pay more. As we said, they have more control over the risks and more ability to make them safer. And then we just use the fund to pay whenever bad things happen.

We're almost out of time. Let's close just by talking about what we should be looking out for. Are there any recent advances in AI insurance that we should be on the lookout for? We've been talking about autonomous vehicles.

I don't know how generative AI models play into that, but what should we expect to see coming from insurance companies in this space?

That will actually be my next project, talking with insurance companies and seeing what they're thinking about this. So hopefully next time when I talk to you, I'll have very detailed information about that.

But right now, my intuition is this. As I mentioned, I was on a panel with someone from Munich Re, which is already offering policies to small and medium-sized companies working with AI to protect against the risks associated with it. That's different from what we've been talking about here, which is using the insurance infrastructure as it exists today and building on it to cover AI damages. Munich Re is saying, let's do what we did with cyber insurance and create a specific policy to cover this.

And they're working in this field and making progress. They're the only ones doing it right now.

Again, I assume they have some exclusions and the model is not perfect, but they are offering these specific policies. I expect that more companies will not necessarily create their own AI product, but they will definitely look more deeply into how AI is influencing the traditional policies they currently offer, similar to how, with cyber risk, a slow process of exclusions from traditional policies eventually led to the creation of cyber insurance.

Because in the near future AI is supposed to cooperate with us rather than replace us, in my opinion, I don't see a situation where we need a standalone AI policy. We just need to tweak the policies we already have.

If we're talking about, as I mentioned, malpractice, or directors and officers, wherever a decision is being made by AI, maybe we should think about who should be held liable, or redistribute the blame to bring the manufacturer of the AI into the picture in some way, but not completely alter how we use insurance or how the policies are currently built. Things may be different in the future, when we maybe have an existential threat or personhood for AI. My article also talks about the singularity and what will happen then. Maybe AI entities will own their own policies. It's plausible, but it's pretty far off for us.

So right now, I assume more companies, seeing how lucrative this is, will offer more policies, but they will do it in a more nuanced and safe manner, learning from the mistakes of cyber insurance and what happened there.

Anat Lior, thank you so much for being on the podcast.

Thank you so much for having me.